What is AI bias mitigation, and how can it improve AI fairness?

Algorithmic bias is one of the AI industry’s most prolific areas of scrutiny. Unintended systemic errors risk leading to unfair or arbitrary outcomes, elevating the need for standardized ethical and responsible technology, especially as the AI market is expected to hit $110 billion by 2024.

There are multiple ways AI can become biased and create harmful outcomes.

First is the business processes themselves that the AI is being designed to augment or replace. If those processes, the context, and who they are applied to are biased against certain groups, regardless of intent, then the resulting AI application will be biased as well.

Second, the foundational assumptions the AI creators have about the goals of the system, who will use it, the values of those impacted, or how it will be applied can insert harmful bias. Third, the data set used to train and evaluate an AI system can result in harm if the data is not representative of everyone it will impact, or if it represents historical, systemic bias against specific groups.

Finally, the model itself can be biased if sensitive variables (e.g., age, race, gender) or their proxies (e.g., name, ZIP code) are factors in the model’s predictions or recommendations. Developers must identify where bias exists in each of these areas, and then objectively audit systems and processes that lead to unfair models (which is easier said than done as there are at least 21 different definitions of fairness).
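To make the idea of a proxy concrete, here is a minimal sketch of one way to check whether a feature such as ZIP code effectively reveals a sensitive attribute. The function names and the toy records are hypothetical, and the measure (how often the most common group within each feature value matches the record) is only one simple choice among many.

```python
from collections import Counter, defaultdict

def majority_rate(labels):
    """Share of records covered by the single most common label."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)

def proxy_strength(feature_values, sensitive_values):
    """How well a feature 'guesses' a sensitive attribute.

    For each distinct feature value, guess the most common sensitive
    value seen with it, then report the overall accuracy of that guess.
    """
    by_feature = defaultdict(list)
    for f, s in zip(feature_values, sensitive_values):
        by_feature[f].append(s)
    correct = sum(Counter(g).most_common(1)[0][1] for g in by_feature.values())
    return correct / len(sensitive_values)

# Hypothetical toy records: a ZIP code and a group label per person.
zip_codes = ["94110", "94110", "94110", "10001", "10001", "10001", "60601", "60601"]
groups = ["a", "a", "a", "b", "b", "b", "a", "b"]

baseline = majority_rate(groups)             # guessing without the feature
with_zip = proxy_strength(zip_codes, groups)  # guessing with the feature
print(f"baseline={baseline:.3f} with_zip={with_zip:.3f}")
```

A large jump from the baseline to the feature-based guess suggests the feature may act as a proxy. On small samples or very fine-grained features this measure will overstate the effect, so it is best treated as a prompt for closer review rather than a verdict.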

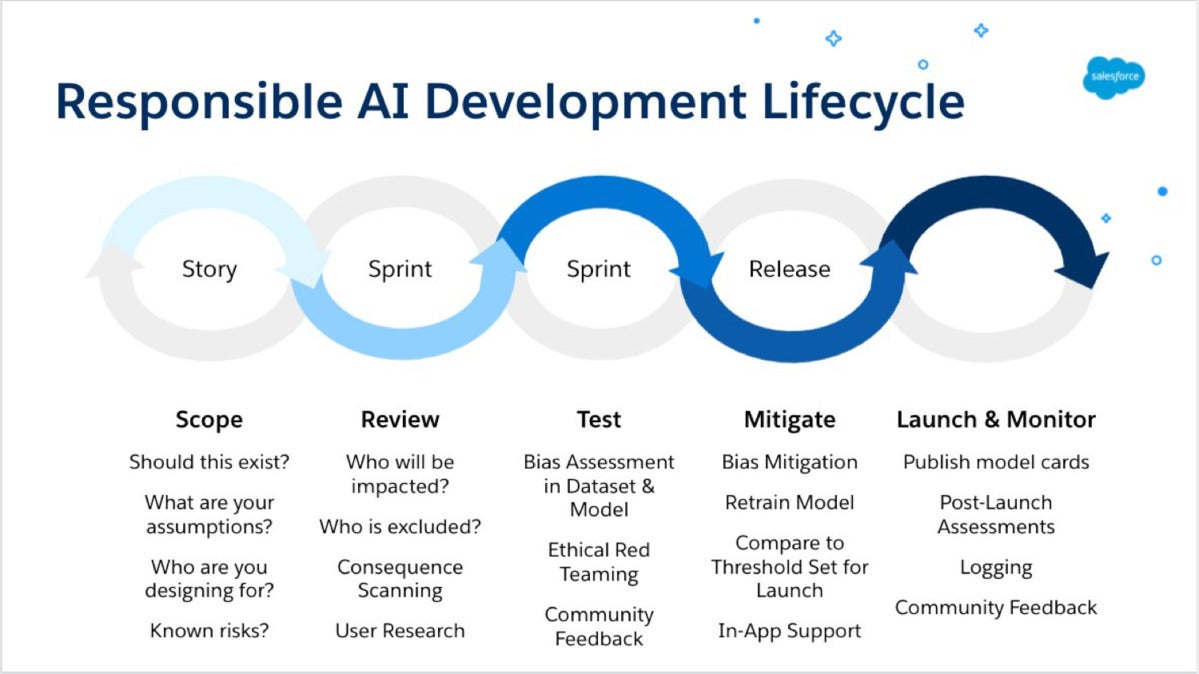

To build AI responsibly, building in ethics by design throughout the AI development lifecycle is paramount to mitigation. Let’s take a look at each step.

The responsible AI development lifecycle in an agile system. Credit: Salesforce.com

Scope

With any technology project, begin by asking, “Should this exist?” and not just “Can we build it?”

We don’t want to fall into the trap of technosolutionism: the belief that technology is the solution to every problem or challenge. In the case of AI, in particular, one should ask if AI is the right solution to achieve the targeted goal. What assumptions are being made about the goal of the AI, about the people who will be impacted, and about the context of its use? Are there any known risks or societal or historical biases that could impact the training data required for the system? We all have implicit biases. Historical sexism, racism, ageism, ableism, and other biases will be amplified in the AI unless we take explicit steps to address them.

But we can’t address bias until we look for it. That’s the next step.

Assessment

Deep user research is necessary to thoroughly interrogate our assumptions. Who is included and represented in data sets, and who is excluded? Who will be impacted by the AI, and how? This step is where methodologies like consequence scanning workshops and harms modeling come in. The goal is to identify the ways in which an AI system can cause unintended harm, whether through malicious actors or through well-intentioned, naïve ones.

What are the alternative but valid ways an AI could be used that unknowingly cause harm? How can one mitigate those harms, especially those that may fall upon the most vulnerable populations (e.g., children, elderly, disabled, poor, marginalized populations)? If it’s not possible to identify ways to mitigate the most likely and most severe harms, stop. This is a sign that the AI system being developed should not exist.

Test

There are many open-source tools available today to identify bias and fairness in data sets and models (e.g., Google’s What-If Tool, ML Fairness Gym, IBM’s AI Fairness 360, Aequitas, Fairlearn). There are also tools available to visualize and interact with data to better understand how representative or balanced it is (e.g., Google’s Facets, IBM’s AI Explainability 360). Some of these tools also include the ability to mitigate bias, but most do not, so be prepared to purchase tooling for that purpose.
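As a rough illustration of what such tools measure, the sketch below computes two common fairness checks by hand, without using any of the toolkits named above: the gap in selection rates between groups (often called demographic parity) and the gap in true positive rates (often called equal opportunity). The function names and the toy labels are hypothetical.

```python
def rate(values):
    """Mean of a list of 0/1 values; 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0

def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate and true positive rate."""
    metrics = {}
    for g in sorted(set(groups)):
        rows = [(t, p) for t, p, gg in zip(y_true, y_pred, groups) if gg == g]
        metrics[g] = {
            "selection_rate": rate([p for _, p in rows]),
            "true_positive_rate": rate([p for t, p in rows if t == 1]),
        }
    return metrics

# Hypothetical toy data: 1 = qualified (y_true) or selected (y_pred).
groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 1, 0]
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]

metrics = group_metrics(y_true, y_pred, groups)
parity_gap = abs(metrics["a"]["selection_rate"] - metrics["b"]["selection_rate"])
opportunity_gap = abs(
    metrics["a"]["true_positive_rate"] - metrics["b"]["true_positive_rate"]
)
print(f"parity_gap={parity_gap:.3f} opportunity_gap={opportunity_gap:.3f}")
```

In this toy data both groups are selected at the same rate, yet qualified members of group "b" are selected less often than qualified members of group "a". The same predictions pass one definition of fairness and fail another, which is why the choice of definition has to be made explicitly.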

Mitigation

There are different ways to mitigate harm. Developers may choose to remove the riskiest functionality or incorporate warnings and in-app messaging to provide mindful friction, guiding people on the responsible use of AI. Alternatively, one may choose to tightly monitor and control how a system is being used, disabling it when harm is detected. In some cases, this type of oversight and control is not possible (e.g., tenant-specific models where customers build and train their own models on their own data sets).

There are also ways to directly address and mitigate bias in data sets and models. Let’s explore the process of bias mitigation through three distinct categories that can be introduced at various stages of a model: pre-processing (mitigating bias in training data), in-processing (mitigating bias in classifiers), and post-processing (mitigating bias in predictions). Hat tip to IBM for their early work in defining these categories.

Pre-processing bias mitigation

Pre-processing mitigation focuses on training data, which underpins the first phase of AI development and is often where underlying bias is likely to be introduced. When analyzing model performance, there may be a disparate impact happening (i.e., a specific gender being more or less likely to be hired or get a loan). Think of it in terms of harmful bias (i.e., a woman is able to repay a loan, but she is denied based primarily on her gender) or in terms of fairness (i.e., I want to make sure I am hiring a balance of genders).
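One pre-processing technique, commonly known as reweighing, can be sketched as follows. It is offered here as an illustration rather than as the method this article prescribes. Each training record gets a weight so that, in the weighted data, the outcome is statistically independent of the group. The disparate impact ratio (the unprivileged group’s positive rate divided by the privileged group’s) is computed before and after. All names and the toy loan data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight = expected count if group and label were independent / actual count."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = group_counts[g] * label_counts[y] / n
        weights.append(expected / joint_counts[(g, y)])
    return weights

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total

def disparate_impact(groups, labels, weights, unprivileged, privileged):
    """Ratio of positive rates; 1.0 means both groups fare equally."""
    return weighted_positive_rate(
        groups, labels, weights, unprivileged
    ) / weighted_positive_rate(groups, labels, weights, privileged)

# Hypothetical historical loan decisions: 1 = approved.
groups = ["m"] * 4 + ["f"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]

uniform = [1.0] * len(labels)
before = disparate_impact(groups, labels, uniform, "f", "m")
weights = reweighing_weights(groups, labels)
after = disparate_impact(groups, labels, weights, "f", "m")
print(f"before={before:.3f} after={after:.3f}")
```

The resulting weights would then be passed to a learner that supports per-sample weights. Reweighing leaves the records themselves untouched, which makes it easy to audit, but it cannot repair a data set from which a group is missing altogether.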

Humans are heavily involved at the training data stage, but humans have inherent biases. The likelihood of negative outcomes increases with a lack of diversity in the teams responsible for building and implementing the technology. For instance, if a certain group is unintentionally left out of a data set, then the system automatically puts that group of people at a significant disadvantage because of the way data is used to train models.
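A simple representation check can catch a group that was left out before any model is trained. The sketch below compares each group’s share of the training data with a reference share for the population the system will affect. The age bands, the reference shares, and the 5-point tolerance are all hypothetical choices made for illustration.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Data-set share minus reference share for each reference group."""
    counts = Counter(groups)
    n = len(groups)
    return {g: counts.get(g, 0) / n - share for g, share in reference_shares.items()}

def underrepresented(groups, reference_shares, tolerance=0.05):
    """Groups whose data-set share falls short by more than the tolerance."""
    gaps = representation_gaps(groups, reference_shares)
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)

# Hypothetical training data with nobody aged 65 or over.
training_groups = ["18-39"] * 6 + ["40-64"] * 4
# Hypothetical shares of the population the system will affect.
reference = {"18-39": 0.4, "40-64": 0.4, "65+": 0.2}

flagged = underrepresented(training_groups, reference)
print(flagged)
```

Because the check iterates over the reference groups rather than the groups found in the data, a group with zero records is still reported instead of silently disappearing.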

In-processing bias mitigation

In-processing techniques allow us to mitigate bias in classifiers while working on the model. In machine learning, a classifier is an algorithm that automatically orders or categorizes data into one or more sets. The goal here is to go beyond accuracy and ensure systems are both fair and accurate.

For instance, when a financial institution is attempting to measure a customer’s “ability to repay” before approving a loan, its AI system may predict someone’s ability based on sensitive or protected variables like race and gender or proxy variables (like ZIP code, which may correlate with race). These in-process biases lead to inaccurate and unfair outcomes.

By incorporating a slight modification during training, in-processing techniques allow for the mitigation of bias while also ensuring the model is producing accurate outcomes.
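One possible form of that modification is a fairness penalty added to the training loss. The sketch below is an assumption about how this could look, not a description of any particular toolkit: a small logistic regression trained by gradient descent, where the loss also penalizes the squared gap between the two groups’ average predicted scores. The data, the group names, the `fairness_weight` parameter, and the learning settings are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)))

def score_gap(w, X, groups):
    """Mean predicted score of group 'a' minus that of group 'b'."""
    a = [predict(w, x) for x, g in zip(X, groups) if g == "a"]
    b = [predict(w, x) for x, g in zip(X, groups) if g == "b"]
    return sum(a) / len(a) - sum(b) / len(b)

def train(X, y, groups, fairness_weight=0.0, lr=0.5, steps=2000):
    """Gradient descent on: average log loss + fairness_weight * score_gap**2."""
    w = [0.0] * len(X[0])
    n, n_a, n_b = len(X), groups.count("a"), groups.count("b")
    for _ in range(steps):
        preds = [predict(w, x) for x in X]
        gap = score_gap(w, X, groups)
        grad = [0.0] * len(w)
        for x, yi, p, g in zip(X, y, preds, groups):
            # Gradient of the average log loss for this record.
            loss_term = (p - yi) / n
            # Gradient of the penalty (chain rule through the sigmoid).
            sign = 1.0 / n_a if g == "a" else -1.0 / n_b
            fair_term = fairness_weight * 2.0 * gap * sign * p * (1.0 - p)
            for j, xj in enumerate(x):
                grad[j] += (loss_term + fair_term) * xj
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

# Columns: qualification score, proxy flag (1 for group "a"), constant bias term.
scores = [0.9, 0.7, 0.4, 0.2]
X = [[s, 1.0, 1.0] for s in scores] + [[s, 0.0, 1.0] for s in scores]
groups = ["a"] * 4 + ["b"] * 4
y = [1, 1, 1, 0, 1, 0, 0, 0]  # hypothetical historical labels favoring group "a"

w_plain = train(X, y, groups)
w_fair = train(X, y, groups, fairness_weight=5.0)
gap_plain = abs(score_gap(w_plain, X, groups))
gap_fair = abs(score_gap(w_fair, X, groups))
print(f"gap_plain={gap_plain:.3f} gap_fair={gap_fair:.3f}")
```

Raising `fairness_weight` pushes the two groups’ average scores closer together at some cost in fit to the historical labels, so the value has to be tuned against whatever accuracy and fairness thresholds the team has agreed on.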

Post-processing bias mitigation

Post-processing mitigation becomes useful after developers have trained a model, but now want to equalize the outcomes. At this stage, post-processing aims to mitigate bias in predictions, adjusting only the outcomes of a model instead of the classifier or training data.

However, when augmenting outputs one may be altering the accuracy. For instance, this process might result in hiring fewer qualified men if the desired outcome is equal gender representation, rather than relevant skill sets (sometimes referred to as positive bias or affirmative action). This will impact the accuracy of the model, but it achieves the desired goal.
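A minimal sketch of one post-processing approach is to leave the model and its scores alone and choose a separate decision threshold per group so that each group is selected at the same target rate. The function names, scores, and target rate below are hypothetical, and other post-processing methods exist.

```python
def group_thresholds(scores, groups, target_rate):
    """Per-group score cutoff that selects roughly target_rate of each group."""
    thresholds = {}
    for g in sorted(set(groups)):
        ranked = sorted((s for s, gg in zip(scores, groups) if gg == g), reverse=True)
        k = max(1, round(target_rate * len(ranked)))
        thresholds[g] = ranked[k - 1]
    return thresholds

def apply_thresholds(scores, groups, thresholds):
    """1 if a record's score meets its own group's cutoff, else 0."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

# Hypothetical model scores for two groups of four applicants each.
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.5, 0.4, 0.2]
groups = ["a"] * 4 + ["b"] * 4

single_cutoff = [int(s >= 0.6) for s in scores]  # selects 3 from "a", 1 from "b"
thresholds = group_thresholds(scores, groups, target_rate=0.5)
adjusted = apply_thresholds(scores, groups, thresholds)
print(thresholds, adjusted)
```

With per-group cutoffs, an applicant from group "a" scoring 0.6 is no longer selected while one from group "b" scoring 0.5 is, which is exactly the accuracy trade-off described above. Tied scores at the cutoff can also push a group slightly over the target rate.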

Launch and monitor

Once any given model is trained and developers are satisfied that it meets pre-defined thresholds for bias or fairness, one should document how it was trained, how the model works, intended and unintended use cases, bias assessments conducted by the team, and any societal or ethical risks. This level of transparency not only helps customers trust an AI; it may be required if operating in a regulated industry. Fortunately, there are some open-source tools to help (e.g., Google’s Model Card Toolkit, IBM’s AI FactSheets 360, Open Ethics Label).
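The documentation items listed above can be captured in a small structured record so that gaps are easy to spot before launch. The sketch below is a plain Python data class, not the format of any of the tools just mentioned, and every field value is a hypothetical placeholder.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class ModelRecord:
    """Launch documentation for one model (hypothetical structure)."""
    name: str
    training_summary: str
    how_it_works: str
    intended_uses: list = field(default_factory=list)
    unintended_uses: list = field(default_factory=list)
    bias_assessments: list = field(default_factory=list)
    societal_risks: list = field(default_factory=list)

    def missing_sections(self):
        """Names of sections that are still empty."""
        return [key for key, value in asdict(self).items() if not value]

record = ModelRecord(
    name="loan-repayment-model (hypothetical)",
    training_summary="Trained on historical loan outcomes, reweighted by gender.",
    how_it_works="Logistic regression over applicant financial features.",
    intended_uses=["Ranking applications for human review"],
    unintended_uses=["Fully automated denial of applications"],
    bias_assessments=["Selection-rate gap by gender below agreed threshold"],
)

print(json.dumps(asdict(record), indent=2))
print("Still to document:", record.missing_sections())
```

A release checklist could refuse to proceed while `missing_sections()` returns anything, which turns the documentation step into something that is checked rather than merely hoped for.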

Launching an AI system is never set-and-forget; it requires ongoing monitoring for model drift. Drift can impact not only a model’s accuracy and performance but also its fairness. Regularly test a model and be prepared to retrain if the drift becomes too great.
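Monitoring fairness over time can be as simple as recomputing a chosen metric on each new batch of predictions and flagging batches that cross the pre-defined threshold. The sketch below uses the selection-rate gap between groups as the metric. The weekly batches and the 0.3 threshold are hypothetical.

```python
def selection_rate_gap(batch):
    """Largest difference in selection rate between any two groups.

    batch: list of (group, prediction) pairs with 0/1 predictions.
    """
    by_group = {}
    for group, pred in batch:
        by_group.setdefault(group, []).append(pred)
    rates = [sum(preds) / len(preds) for preds in by_group.values()]
    return max(rates) - min(rates)

def drift_alerts(batches, max_gap=0.3):
    """Indexes of batches whose gap exceeds the pre-defined threshold."""
    return [i for i, batch in enumerate(batches) if selection_rate_gap(batch) > max_gap]

# Hypothetical weekly prediction logs; the gap widens from week to week.
week_1 = [("a", 1), ("a", 0), ("a", 1), ("a", 0), ("b", 1), ("b", 0), ("b", 1), ("b", 0)]
week_2 = [("a", 1), ("a", 0), ("a", 1), ("a", 0), ("b", 1), ("b", 0), ("b", 0), ("b", 0)]
week_3 = [("a", 1), ("a", 1), ("a", 1), ("a", 0), ("b", 1), ("b", 0), ("b", 0), ("b", 0)]

alerts = drift_alerts([week_1, week_2, week_3])
print(alerts)
```

An alert is a signal to investigate and possibly retrain, not proof of harm, since small batches produce noisy rates. In practice the batch size and threshold would be chosen together.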

Getting AI right

Getting AI “right” is difficult, but more important than ever. The Federal Trade Commission recently signaled that it might enforce laws that prohibit the sale or use of biased AI, and the European Union is working on a legal framework to regulate AI. Responsible AI is not only good for society, it creates better business outcomes and mitigates legal and brand risk.

AI will become more prolific globally as new applications are created to solve major economic, social, and political problems. While there is no “one-size-fits-all” approach to creating and deploying responsible AI, the strategies and techniques discussed in this article will help throughout various stages in an algorithm’s lifecycle, mitigating bias to move us closer to ethical technology at scale.

At the end of the day, it is everyone’s responsibility to ensure that technology is created with the best of intentions, and that systems are in place to identify unintended harm.

Kathy Baxter is principal architect of the ethical AI practice at Salesforce.

—

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to [email protected].

Copyright © 2021 IDG Communications, Inc.