Robot Self-Calibration Using Actuated 3D Sensors

Current robot calibration methods depend on specialized equipment and specially trained staff. To overcome this problem, a recent paper published on arXiv.org proposes a framework that enables true on-site self-calibration of a robot system equipped with an arbitrary eye-in-hand 3D sensor. It uses point cloud registration techniques to fuse multiple scans of a given scene.
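The basic building block behind "point cloud registration" is aligning one scan to another. The sketch below is the textbook point-to-point Iterative Closest Point (ICP) loop with an SVD (Kabsch) solve for the rigid transform. It is not the paper's modified ICP. It assumes NumPy, small clouds (brute-force nearest neighbours), and an initial misalignment small enough for ICP to converge. The function names `best_rigid_transform` and `icp` are illustrative only.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1), not a reflection.
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, iterations=30, tol=1e-10):
    """Point-to-point ICP aligning src (N x 3) to dst (M x 3); returns accumulated R, t."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force nearest neighbours: O(N*M) memory, fine for small clouds only.
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, nearest)
        current = current @ R.T + t
        # Compose this step's update with the transform accumulated so far.
        R_total, t_total = R @ R_total, R @ t_total + t
        err = np.sqrt(d2.min(axis=1).mean())  # RMS correspondence distance before this update
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total
```

Calling `icp(src, dst)` returns the rotation and translation that map `src` onto `dst`. A production system would replace the brute-force search with a k-d tree and typically use a point-to-plane error.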

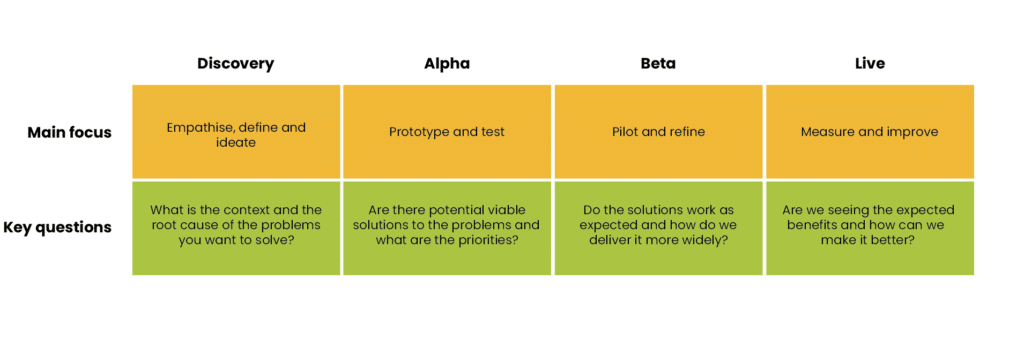

![Image credit: arXiv:2206.03430 [cs.RO]](https://www.technology.org/texorgwp/wp-content/uploads/2022/06/robot-calibration-720x477.jpg)

Image credit: arXiv:2206.03430 [cs.RO]

The proposed approach is the first that can address the calibration of a complete robotic system by relying solely on depth data instead of external tools and objects. The method is suitable for any combination of kinematic chain and depth sensor, such as single-beam LiDARs, line scanners, and depth cameras.
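To make "any kinematic chain" concrete: the pose of an eye-in-hand sensor is the product of one homogeneous transform per joint, followed by a fixed flange-to-sensor (hand-eye) transform, and calibration means estimating the parameters inside those transforms. The sketch below assumes classic Denavit-Hartenberg (DH) parameters for the joints; the paper does not necessarily use this parameterization, and `dh_transform`, `sensor_pose`, and `points_to_base` are hypothetical names.

```python
import numpy as np

def dh_transform(theta, d, a, alpha):
    """4x4 homogeneous transform of one joint, classic DH: Rz(theta) Tz(d) Tx(a) Rx(alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

def sensor_pose(joint_angles, dh_params, T_flange_sensor):
    """Base-to-sensor transform: chain every joint transform, then apply the hand-eye transform.

    dh_params holds one (theta_offset, d, a, alpha) tuple per joint; these values and
    T_flange_sensor are the quantities a kinematic calibration would estimate.
    """
    T = np.eye(4)
    for theta, (theta_offset, d, a, alpha) in zip(joint_angles, dh_params):
        T = T @ dh_transform(theta + theta_offset, d, a, alpha)
    return T @ T_flange_sensor

def points_to_base(points_sensor, T_base_sensor):
    """Map an N x 3 array of sensor-frame points into the robot base frame."""
    homog = np.hstack([points_sensor, np.ones((len(points_sensor), 1))])
    return (homog @ T_base_sensor.T)[:, :3]
```

Because the sensor enters only through `T_flange_sensor` and the points it returns, the same structure works for any depth sensor and any number of joints.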

The evaluation on several real-world scenes with different hardware configurations shows that the achieved accuracy is comparable to that obtained using standard methods with a dedicated 3D tracking system.

Both robot and hand-eye calibration have been the subject of research for many years. While current approaches manage to precisely and robustly identify the parameters of a robot's kinematic model, they still rely on external devices, such as calibration objects, markers and/or external sensors. Instead of trying to fit the recorded measurements to a model of a known object, this paper treats robot calibration as an offline SLAM problem, in which scanning poses are linked to a fixed point in space by a moving kinematic chain. As such, the presented framework enables robot calibration using nothing but an arbitrary eye-in-hand depth sensor, thus enabling fully autonomous self-calibration without any external tools. My new approach uses a modified version of the Iterative Closest Point algorithm to run bundle adjustment on multiple 3D recordings, estimating the optimal parameters of the kinematic model. A detailed evaluation of the system is shown on a real robot with several attached 3D sensors. The presented results show that the system reaches precision comparable to a dedicated external tracking system at a fraction of its cost.
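The core idea, choosing kinematic parameters so that scans taken from different poses agree with each other rather than with a known calibration object, can be shown on a toy problem. The sketch below is a simplified illustration, not the paper's algorithm: it assumes a planar two-link arm, assumes the point correspondences between scans are already known (the paper obtains them with its modified ICP), and uses plain Gauss-Newton with a finite-difference Jacobian on a "deviation from the mean scan" residual as a stand-in for full bundle adjustment. The estimated parameters (two link lengths and a joint-2 offset) and all function names are hypothetical.

```python
import numpy as np

def rot2d(a):
    """2x2 rotation matrix for angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s], [s, c]])

def planar_sensor_pose(q, params):
    """Sensor pose (R, t) of a planar 2-link arm; params = (l1, l2, joint-2 offset)."""
    l1, l2, off2 = params
    a1, a12 = q[0], q[0] + q[1] + off2
    t = l1 * np.array([np.cos(a1), np.sin(a1)]) + l2 * np.array([np.cos(a12), np.sin(a12)])
    return rot2d(a12), t  # the sensor is rigidly mounted on link 2

def residuals(params, joint_configs, scans):
    """Scan-to-scan inconsistency: each scan, mapped to the world, minus the mean of all scans."""
    world = []
    for q, s in zip(joint_configs, scans):
        R, t = planar_sensor_pose(q, params)
        world.append(s @ R.T + t)  # sensor frame -> world frame (row vectors)
    world = np.stack(world)  # K scans x N points x 2
    return (world - world.mean(axis=0)).ravel()

def calibrate(init, joint_configs, scans, iterations=20, eps=1e-7):
    """Gauss-Newton on the consistency residual, using a forward-difference Jacobian."""
    x = np.asarray(init, dtype=float)
    for _ in range(iterations):
        r = residuals(x, joint_configs, scans)
        J = np.empty((r.size, x.size))
        for i in range(x.size):
            dx = np.zeros_like(x)
            dx[i] = eps
            J[:, i] = (residuals(x + dx, joint_configs, scans) - r) / eps
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)
        x = x + step
        if np.linalg.norm(step) < 1e-12:
            break
    return x

# Simulated run: observe fixed landmarks with the "true" arm, then calibrate from nominal values.
rng = np.random.default_rng(1)
true_params = np.array([1.02, 0.49, 0.01])
landmarks = rng.uniform([1.5, -1.0], [2.5, 1.0], size=(20, 2))  # static scene (world frame)
joint_configs = rng.uniform(-0.8, 0.8, size=(8, 2))
scans = []
for q in joint_configs:
    R, t = planar_sensor_pose(q, true_params)
    scans.append((landmarks - t) @ R)  # world -> sensor frame
scans = np.stack(scans)
estimate = calibrate([1.0, 0.5, 0.0], joint_configs, scans)
```

An offset on the first joint is deliberately left out: it rotates every fused scan by the same angle, so scan-to-scan consistency alone cannot observe it, which mirrors the general point that self-calibration only recovers parameters that change how the scans agree with one another.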

Research paper: Peters, A., "Robot Self-Calibration Using Actuated 3D Sensors", 2022. Link: https://arxiv.org/abs/2206.03430