How to bring zero-trust security to microservices

Transitioning to microservices has many advantages for teams building large applications, particularly those that must accelerate the pace of innovation, deployments, and time to market. Microservices also give technology teams the opportunity to secure their applications and services better than they did with monolithic code bases.

Zero-trust security provides these teams with a scalable way to make security foolproof while managing a growing number of microservices and greater complexity. That’s right. Although it seems counterintuitive at first, microservices allow us to secure our applications and all of their services better than we ever did with monolithic code bases. Failure to seize that opportunity will result in insecure, exploitable, and non-compliant architectures that will only become more difficult to secure in the future.

Let’s understand why we need zero-trust security in microservices. We will also review a real-world zero-trust security example by leveraging the Cloud Native Computing Foundation’s Kuma project, a universal service mesh built on top of the Envoy proxy.

Security before microservices

In a monolithic application, every resource that we create can be accessed indiscriminately from every other resource via function calls because they are all part of the same code base. Typically, resources are going to be encapsulated into objects (if we use OOP) that will expose initializers and functions that we can invoke to interact with them and change their state.

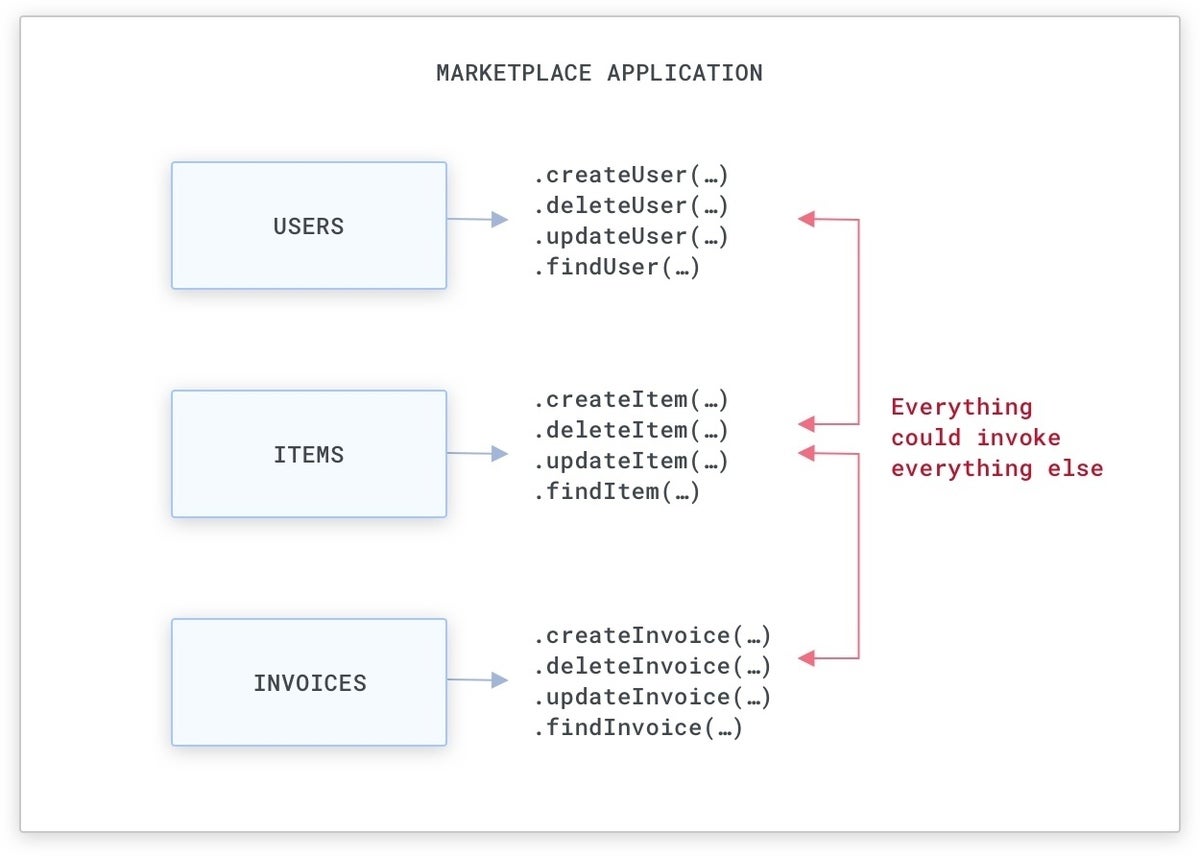

For example, if we are building a marketplace application (like Amazon.com), there will be resources that identify users and the items for sale, and that generate invoices when items are sold:

A simple marketplace monolithic application. (Image: Kong)

Typically, this means we will have objects that we can use to create, delete, or update these resources via function calls that can be used from anywhere in the monolithic code base. While there are ways to reduce access to certain objects and functions (for example, with public, private, and protected access-level modifiers and package-level visibility), usually these practices are not strictly enforced by teams, and our security should not depend on them.

A monolithic code base is easy to exploit, because resources can potentially be accessed from anywhere in the code base. (Image: Kong)
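As a rough illustration of this point, here is a minimal Python sketch of a monolith in which invoice code and item code share one process. The class and function names are hypothetical, and Python's leading-underscore convention stands in for the access modifiers other languages offer; nothing stops unrelated code from reaching in.

```python
class Invoice:
    """An invoice resource living in the shared monolithic code base."""

    def __init__(self, user: str, total: float) -> None:
        self.user = user
        self._total = total  # "private" by convention only

    @property
    def total(self) -> float:
        return self._total


def apply_item_discount(invoice: Invoice) -> None:
    """Item-related code that was never meant to touch invoices.

    Because everything shares one code base, it can still rewrite
    the invoice's state with a plain attribute assignment.
    """
    invoice._total = 0.0


invoice = Invoice(user="alice", total=120.0)
apply_item_discount(invoice)
print(invoice.total)  # the invoice was silently zeroed out
```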

Security with microservices

With microservices, instead of having every resource in the same code base, we will have those resources decoupled and assigned to individual services, with each service exposing an API that can be used by another service. Instead of executing a function call to access or change the state of a resource, we can execute a network request.

With microservices, our resources can interact with each other via service requests over the network as opposed to function calls within the same monolithic code base. The APIs can be RPC-based, REST, or anything else really. (Image: Kong)

By default, this doesn’t change our situation: Without proper barriers in place, every service could theoretically consume the exposed APIs of another service to change the state of every resource. But because the communication medium has changed and it is now the network, we can use technologies and patterns that operate on the network connectivity itself to set up our barriers and determine the access levels that every service should have in the big picture.

Understanding zero-trust security

To implement security rules over the network connectivity among our services, we need to set up permissions, and then check those permissions on every incoming request.

For example, we may want to allow the “Invoices” and “Users” services to consume each other (an invoice is always associated with a user, and a user can have many invoices), but only allow the “Invoices” service to consume the “Items” service (since an invoice is always associated with an item), like in the following scenario:

A graphical representation of connectivity permissions between services. The arrows and their direction determine whether services can make requests (green) or not (red). For example, the Items service cannot consume any other service, but it can be consumed by the Invoices service. (Image: Kong)

After setting up permissions (we will explore shortly how a service mesh can be used to do this), we then need to check them. The component that checks our permissions will have to determine whether the incoming requests are being sent by a service that has been allowed to consume the current service. We will implement a check somewhere along the execution path, something like this:

    if (incoming_service == "items")
        deny()
    else
        allow()

This check can be done by our services themselves or by anything else on the execution path of the requests, but ultimately it has to happen somewhere.
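The marketplace scenario can be sketched as a small permission table. This is only an illustration in Python: the `ALLOWED` set and the two function names are hypothetical, denying everything that is not listed is an assumption of the sketch, and a real deployment would take the caller's name from a verified identity rather than a plain string.

```python
# Each pair is (source service, destination service) that may communicate.
ALLOWED = {
    ("invoices", "users"),
    ("users", "invoices"),
    ("invoices", "items"),
}


def is_allowed(source: str, destination: str) -> bool:
    """Return True only if the pair was explicitly permitted (deny by default)."""
    return (source, destination) in ALLOWED


def handle_request(source: str, destination: str) -> str:
    """Run the permission check on the execution path of a request."""
    return "allow" if is_allowed(source, destination) else "deny"


print(handle_request("invoices", "items"))  # allow
print(handle_request("items", "invoices"))  # deny
```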

The biggest problem to solve before enforcing these permissions is having a reliable way to assign an identity to each service so that when we identify the services in our checks, they are who they claim to be.

Identity is essential. Without identity, there is no security. Whenever we travel and enter a new country, we show a passport that associates our persona with the document, and by doing so, we certify our identity. Likewise, our services also must present a “virtual passport” that validates their identities.

Since the concept of trust is exploitable, we must remove all forms of trust from our systems, and hence we must implement “zero-trust” security.

The identity of the caller is sent on every request via mTLS. (Image: Kong)

In order for zero-trust to be implemented, we must assign an identity to every service instance that will be used for every outgoing request. The identity will act as the “virtual passport” for that request, confirming that the originating service is indeed who it claims to be. mTLS (Mutual Transport Layer Security) can be adopted to provide both identities and encryption on the transport layer. Since every request now provides an identity that can be verified, we can then enforce the permissions checks.
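To make the “mutual” part concrete, here is a minimal sketch using Python's standard `ssl` module. The function name and its parameters are hypothetical, and the file paths are left optional only so the sketch runs without certificate files on disk; a real service would always supply them.

```python
import ssl
from typing import Optional


def mtls_server_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None,
                        ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a server-side TLS context that also demands a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    if cert_file and key_file:
        # The service's own identity, presented to callers.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA used to verify the certificates that callers present.
        context.load_verify_locations(cafile=ca_file)
    # This is what makes TLS "mutual": the handshake fails unless the
    # client presents a certificate that can be verified.
    context.verify_mode = ssl.CERT_REQUIRED
    return context


context = mtls_server_context()  # paths omitted so the sketch runs without files
print(context.verify_mode == ssl.CERT_REQUIRED)  # True
```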

The identity of a service is typically assigned as a SAN (Subject Alternative Name) of the originating TLS certificate associated with the request, as in the case of zero-trust security enabled by a Kuma service mesh, which we will explore shortly.

SAN is an extension to X.509 (a standard used to create public key certificates) that allows us to assign a custom value to a certificate. In the case of zero-trust, the service name will be one of those values that is passed along with the certificate in a SAN field. When a request is received by a service, we can extract the SAN from the TLS certificate, and from it the service name, which is the identity of the service. We can then implement the authorization checks knowing that the originating service really is who it claims to be.

The SAN (Subject Alternative Name) is very commonly used in TLS certificates and can also be explored in our browser. In the picture above, we can see some of the SAN values belonging to the TLS certificate for Google.com. (Image: Kong)
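The extraction step can be sketched in a few lines of Python. The input is assumed to have the dictionary shape returned by `ssl.SSLSocket.getpeercert()` after a verified handshake; the function name and the SPIFFE-style `spiffe://<mesh>/<service>` URI layout are assumptions made for illustration, since the text only says that the service name travels in a SAN field.

```python
from typing import Optional
from urllib.parse import urlparse


def service_from_cert(peer_cert: dict) -> Optional[str]:
    """Extract a service name from the SAN entries of a verified peer certificate.

    `subjectAltName` is expected to be a tuple of (type, value) pairs,
    such as ("URI", "spiffe://default/invoices").
    """
    for san_type, value in peer_cert.get("subjectAltName", ()):
        if san_type != "URI":
            continue
        uri = urlparse(value)
        service = uri.path.strip("/")
        if uri.scheme == "spiffe" and service:
            return service  # assumed layout: spiffe://<mesh>/<service>
    return None


# Hand-written example data; a real dict would come from a completed mTLS handshake.
cert = {
    "subjectAltName": (
        ("DNS", "invoices.local"),
        ("URI", "spiffe://default/invoices"),
    )
}
print(service_from_cert(cert))  # invoices
```

Returning `None` when no matching SAN is found lets the caller treat an unidentified peer as denied.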

Now that we have explored the importance of having identities for our services and we understand how we can leverage mTLS as the “virtual passport” that is included in every request our services make, we are still left with several open topics that we need to address:

- Assigning TLS certificates and identities on every instance of every service.

- Validating the identities and checking permissions on every request.

- Rotating certificates over time to improve security and prevent impersonation.

These are very hard problems to solve because they effectively provide the backbone of our zero-trust security implementation. If not done correctly, our zero-trust security model will be flawed, and therefore insecure.
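As a small illustration of the rotation task, the sketch below decides when a certificate is due for renewal. The function name and the 80 percent threshold are arbitrary assumptions chosen for the example, not the policy of any particular service mesh.

```python
from datetime import datetime, timedelta, timezone


def needs_rotation(not_before: datetime, not_after: datetime,
                   now: datetime, threshold: float = 0.8) -> bool:
    """Return True once `threshold` of the certificate's lifetime has elapsed."""
    lifetime = not_after - not_before
    return now >= not_before + lifetime * threshold


issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(days=1)  # a short-lived, one-day certificate

print(needs_rotation(issued, expires, issued + timedelta(hours=12)))  # False
print(needs_rotation(issued, expires, issued + timedelta(hours=20)))  # True
```

Renewing before expiry, rather than at expiry, leaves room for retries if the certificate authority is briefly unreachable.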

Moreover, the above tasks must be implemented for every instance of every service that our application teams are creating. In a typical organization, these service instances will include both containerized and VM-based workloads running across one or more cloud providers, perhaps even in our physical data center.

The biggest mistake any organization could make is asking its teams to build these features from scratch every time they create a new application. The resulting fragmentation in the security implementations will create unreliability in how the security model is implemented, making the entire system insecure.

Service mesh to the rescue

Service mesh is a pattern that implements modern service connectivity functionalities in such a way that we do not need to update our applications to take advantage of them. A service mesh is typically delivered by deploying data plane proxies next to every instance (or Pod) of our services and a control plane that is the source of truth for configuring those data plane proxies.

In a service mesh, all the outgoing and incoming requests are automatically intercepted by the data plane proxies (Envoy) that are deployed next to each instance of each service. The control plane (Kuma) is in charge of propagating the policies we want to set up (like zero-trust) to the proxies. The control plane is never on the execution path of the service-to-service requests; only the data plane proxies live on the execution path. (Image: Kong)

The service mesh pattern is based on the idea that our services should not be in charge of managing the inbound or outbound connectivity. Over time, services written in different technologies will inevitably end up having various implementations. Therefore, a fragmented way to manage that connectivity ultimately will result in unreliability. Plus, the application teams should focus on the application itself, not on managing connectivity, since that should ideally be provisioned by the underlying infrastructure. For these reasons, service mesh not only gives us all sorts of service connectivity functionality out of the box, like zero-trust security, but also makes the application teams more efficient while giving the infrastructure architects complete control over the connectivity that is being generated within the organization.

Just as we didn’t ask our application teams to walk into a physical data center and manually connect the networking cables to a router/switch for L1-L3 connectivity, today we don’t want them to build their own network management software for L4-L7 connectivity. Instead, we want to use patterns like service mesh to provide that to them out of the box.

Zero-trust security via Kuma

Kuma is an open source service mesh (first created by Kong and then donated to the CNCF) that supports multi-cluster, multi-region, and multi-cloud deployments across both Kubernetes and virtual machines (VMs). Kuma provides more than 10 policies that we can apply to service connectivity (like zero-trust, routing, fault injection, discovery, multi-mesh, etc.) and has been engineered to scale in large distributed enterprise deployments. Kuma natively supports the Envoy proxy as its data plane proxy technology. Ease of use has been a focus of the project since day one.

Kuma can run a distributed service mesh across clouds and clusters, including hybrid Kubernetes plus VMs, via its multi-zone deployment mode. (Image: Kong)

With Kuma, we can deploy a service mesh that can deliver zero-trust security across both containerized and VM workloads in a single or multiple cluster setup. To do so, we need to follow these steps:

1. Download and install Kuma at kuma.io/install.

2. Start our services and start `kuma-dp` next to them (in Kubernetes, `kuma-dp` is automatically injected). We can follow the getting started instructions on the installation page to do this for both Kubernetes and VMs.

Then, once our control plane is running and the data plane proxies are successfully connecting to it from each instance of our services, we can execute the final step:

3. Enable the mTLS and Traffic Permission policies on our service mesh via the Mesh and TrafficPermission Kuma resources.

In Kuma, we can create multiple isolated virtual meshes on top of the same deployment of service mesh, which is typically used to support multiple applications and teams on the same service mesh infrastructure. To enable zero-trust security, we first need to enable mTLS on the Mesh resource of choice by enabling the mtls property.

In Kuma, we can decide to let the system generate its own certificate authority (CA) for the Mesh or we can set our own root certificate and keys. The CA certificate and key will then be used to automatically provision a new TLS certificate for every data plane proxy with an identity, and it will also automatically rotate those certificates with a configurable interval of time. In Kong Mesh, we can also talk to a third-party PKI (like HashiCorp Vault) to provision a CA in Kuma.

For example, on Kubernetes, we can enable a builtin certificate authority on the default mesh by applying the following resource via kubectl (on VMs, we can use Kuma’s CLI kumactl):

    apiVersion: kuma.io/v1alpha1
    kind: Mesh
    metadata:
      name: default
    spec:
      mtls:
        enabledBackend: ca-1
        backends:
          - name: ca-1
            type: builtin
            dpCert:
              rotation:
                expiration: 1d
            conf:
              caCert:
                RSAbits: 2048
                expiration: 10y