How to avoid GC pressure in C# and .NET

Garbage collection occurs when the system is low on available physical memory or when the GC.Collect() method is called explicitly in your application code. Objects that are no longer in use or are unreachable from the root are candidates for garbage collection.

While the .NET garbage collector, or GC, is adept at reclaiming memory occupied by managed objects, there may be times when it comes under pressure, i.e., when it must spend more time collecting such objects. When the GC is under pressure to clean up objects, your application will spend more time garbage collecting than executing instructions.

Naturally, this GC pressure is detrimental to the application’s performance. The good news is, you can avoid GC pressure in your .NET and .NET Core applications by following certain best practices. This article talks about those best practices, using code examples where applicable.

Note that we will be taking advantage of BenchmarkDotNet to track the performance of the methods. If you are not familiar with BenchmarkDotNet, I recommend reading an introduction to it first.

To work with the code examples provided in this article, you should have Visual Studio 2019 installed in your system. If you don’t already have a copy, you can download Visual Studio 2019 from Microsoft.

Create a console application project in Visual Studio

First off, let’s create a .NET Core console application project in Visual Studio. Assuming Visual Studio 2019 is installed in your system, follow the steps outlined below to create a new .NET Core console application project in Visual Studio.

- Launch the Visual Studio IDE.

- Click on “Create new project.”

- In the “Create new project” window, select “Console App (.NET Core)” from the list of templates displayed.

- Click Next.

- In the “Configure your new project” window, specify the name and location for the new project.

- Click Create.

We’ll use this project to illustrate best practices for avoiding GC pressure in the subsequent sections of this article.

Avoid large object allocations

There are two different types of heap in .NET and .NET Core, namely the small object heap (SOH) and the large object heap (LOH). Unlike the small object heap, the large object heap is not compacted during garbage collection by default. The reason is that the cost of compaction for large objects, meaning objects of 85,000 bytes or more (roughly 85KB), is very high, and moving them around in memory would be very time consuming.

Therefore the GC does not normally move large objects; it simply removes them when they are no longer needed. As a consequence, memory holes are formed in the large object heap, causing memory fragmentation. Although you could write your own code to compact the LOH, it is good to avoid large object heap allocations as much as possible. Not only is garbage collection from this heap costly, but the heap is also more prone to fragmentation, which can result in unbounded memory growth over time.
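As a rough illustration of the two heaps, the sketch below asks the runtime which generation it reports for a small and a large byte array, and shows one way to request a one-time LOH compaction. The class and method names are hypothetical, and the explicit GC.Collect() call is there only to trigger the compaction for demonstration purposes.

```csharp
using System;
using System.Runtime;

public static class LohDemo
{
    // Returns the generation the GC reports for a freshly allocated byte array.
    // Objects on the large object heap are reported as generation 2.
    public static int GenerationOfNewArray(int lengthInBytes)
    {
        byte[] buffer = new byte[lengthInBytes];
        return GC.GetGeneration(buffer);
    }

    // Requests that the LOH be compacted during the next blocking full collection.
    // The setting resets itself after that collection has run.
    public static void CompactLargeObjectHeapOnce()
    {
        GCSettings.LargeObjectHeapCompactionMode =
            GCLargeObjectHeapCompactionMode.CompactOnce;
        GC.Collect(); // demonstration only
    }

    public static void Main()
    {
        Console.WriteLine(GenerationOfNewArray(1_000));   // allocated on the small object heap
        Console.WriteLine(GenerationOfNewArray(100_000)); // allocated on the large object heap
        CompactLargeObjectHeapOnce();
    }
}
```

Keeping buffers below the LOH threshold, or reusing large buffers instead of reallocating them, sidesteps the fragmentation problem without any explicit compaction.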

Avoid memory leaks

Not surprisingly, memory leaks are also detrimental to application performance; they can cause performance issues as well as GC pressure. When memory leaks occur, the objects remain referenced even if they are no longer being used. Since the objects are live and remain referenced, the GC promotes them to higher generations instead of reclaiming the memory. Such promotions are not only expensive but also keep the GC unnecessarily busy. When memory leaks occur, more and more memory is used, until available memory threatens to run out. This causes the GC to do more frequent collections to free memory space.
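One common source of such leaks is an event subscription that is never removed: a long-lived publisher keeps every subscriber reachable through its handler list. The following is a minimal sketch of that situation, with hypothetical Publisher and Subscriber types, where the subscriber detaches itself in Dispose.

```csharp
using System;

// Hypothetical long-lived publisher, for example a singleton service.
public class Publisher
{
    public event EventHandler SomethingHappened;

    // Number of handlers that currently keep their subscribers reachable.
    public int SubscriberCount =>
        SomethingHappened?.GetInvocationList().Length ?? 0;

    public void Raise() => SomethingHappened?.Invoke(this, EventArgs.Empty);
}

// Hypothetical short-lived subscriber that removes its handler in Dispose.
public sealed class Subscriber : IDisposable
{
    private readonly Publisher _publisher;

    public int EventsSeen { get; private set; }

    public Subscriber(Publisher publisher)
    {
        _publisher = publisher;
        _publisher.SomethingHappened += OnSomethingHappened;
    }

    private void OnSomethingHappened(object sender, EventArgs e) => EventsSeen++;

    // Without this, the publisher would keep the subscriber alive indefinitely.
    public void Dispose() => _publisher.SomethingHappened -= OnSomethingHappened;
}

public static class LeakDemo
{
    public static void Main()
    {
        var publisher = new Publisher();
        using (var subscriber = new Subscriber(publisher))
        {
            publisher.Raise();
            Console.WriteLine(publisher.SubscriberCount); // 1
        }
        Console.WriteLine(publisher.SubscriberCount);     // 0
    }
}
```

Once the handler is removed, nothing in the publisher refers to the subscriber, so the GC can reclaim it in an early generation instead of promoting it.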

Avoid using the GC.Collect method

When you call the GC.Collect() method, the runtime conducts a stack walk to determine which objects are reachable and which are not. This triggers a blocking garbage collection across all generations. Thus a call to the GC.Collect() method is a time-consuming and resource-intensive operation that should be avoided.

Pre-size data structures

When you populate a collection with data, the data structure will be resized multiple times. Each resize operation allocates a new internal array, into which the contents of the previous array must be copied. You can avoid this overhead by passing the capacity parameter to the collection’s constructor when creating an instance of the collection.

Refer to the following code snippet that illustrates two collections: one created with a preset capacity and the other growing dynamically.

    const int NumberOfItems = 10000;

    // Starts empty, so the internal array is reallocated as items are added.
    [Benchmark]
    public void ArrayListDynamicSize()
    {
        ArrayList arrayList = new ArrayList();
        for (int i = 0; i < NumberOfItems; i++)
        {
            arrayList.Add(i);
        }
    }

    // Reserves room for all items up front, so no resizing is needed.
    [Benchmark]
    public void ArrayListFixedSize()
    {
        ArrayList arrayList = new ArrayList(NumberOfItems);
        for (int i = 0; i < NumberOfItems; i++)
        {
            arrayList.Add(i);
        }
    }

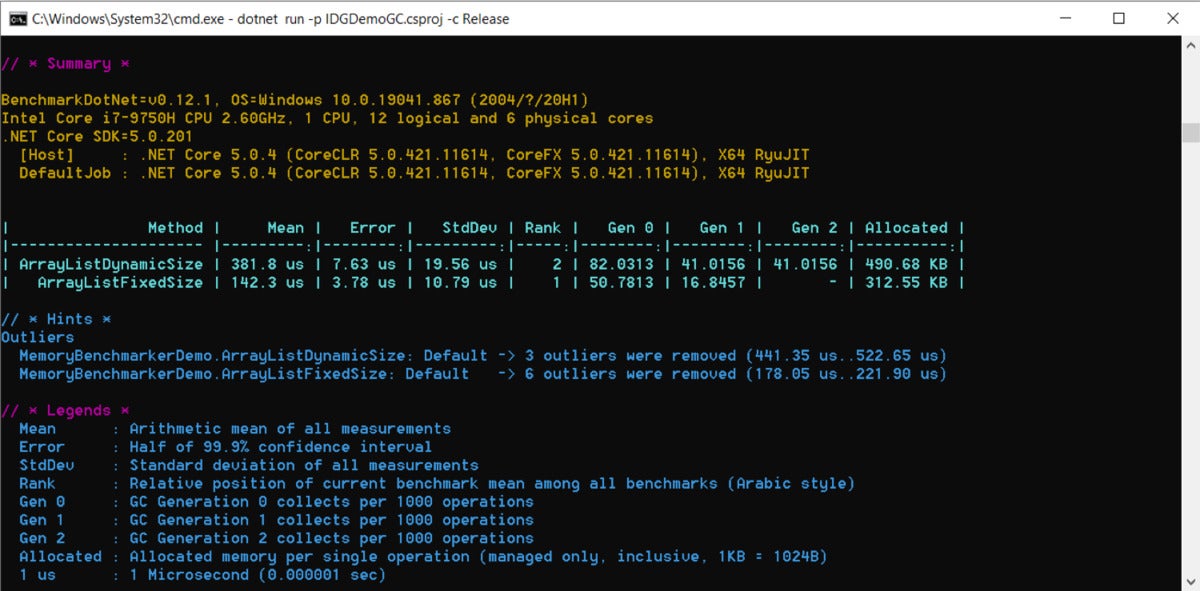

Figure 1 shows the benchmark for the two methods.

Figure 1. (Image: IDG)
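To see the resizing itself rather than only its cost, the sketch below counts how often the Capacity of a List<int> changes while items are added. It uses List<T> instead of ArrayList, and the CapacityDemo class and CountResizes method are names made up for this illustration.

```csharp
using System;
using System.Collections.Generic;

public static class CapacityDemo
{
    // Adds itemCount integers to the list and counts how often Capacity changes,
    // that is, how many times the internal array was reallocated.
    public static int CountResizes(List<int> list, int itemCount)
    {
        int resizes = 0;
        int lastCapacity = list.Capacity;
        for (int i = 0; i < itemCount; i++)
        {
            list.Add(i);
            if (list.Capacity != lastCapacity)
            {
                resizes++;
                lastCapacity = list.Capacity;
            }
        }
        return resizes;
    }

    public static void Main()
    {
        const int NumberOfItems = 10000;

        // Grows on demand: several reallocations, each copying the old contents.
        Console.WriteLine(CountResizes(new List<int>(), NumberOfItems));

        // Pre-sized: the capacity never changes, so this prints 0.
        Console.WriteLine(CountResizes(new List<int>(NumberOfItems), NumberOfItems));
    }
}
```

Every reallocation leaves the previous internal array behind as garbage, which is the extra work the pre-sized version avoids.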

Use ArrayPools to minimize allocations

The ArrayPool and MemoryPool classes help you minimize memory allocations and garbage collection overhead and thereby improve efficiency and performance. The ArrayPool<T> class, found in the System.Buffers namespace, provides a pool of reusable arrays.

Consider the following piece of code that shows two methods: one that uses a regular array and the other that uses a shared array pool.

    const int NumberOfItems = 10000;

    // Allocates a fresh array on every call.
    [Benchmark]
    public void RegularArrayFixedSize()
    {
        int[] array = new int[NumberOfItems];
    }

    // Rents an array from the shared pool and hands it back for reuse.
    [Benchmark]
    public void SharedArrayPool()
    {
        var pool = ArrayPool<int>.Shared;
        int[] array = pool.Rent(NumberOfItems);
        pool.Return(array);
    }

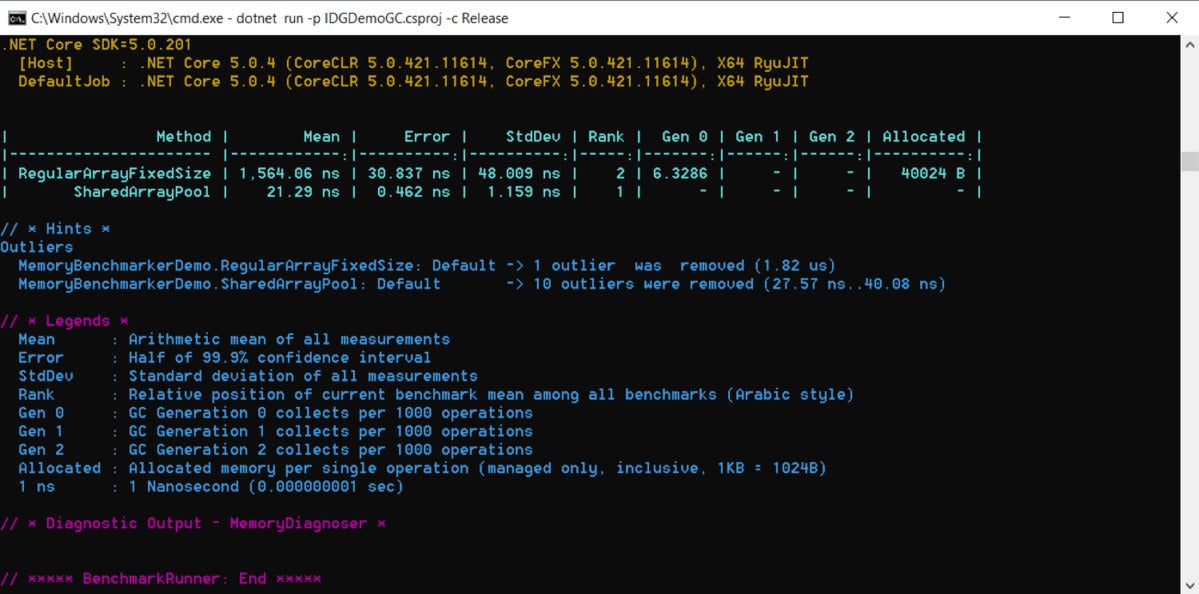

Figure 2 illustrates the performance difference between these two methods.

Figure 2. (Image: IDG)
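In real code a rented array is normally used between Rent and Return, and two details matter: the array handed out may be longer than requested, and it should be returned even if an exception occurs. A minimal sketch of that pattern follows; the PoolDemo class and its method are hypothetical names.

```csharp
using System;
using System.Buffers;

public static class PoolDemo
{
    // Sums the integers 0..count-1 using a scratch buffer rented from the shared pool.
    public static long SumUsingPooledBuffer(int count)
    {
        int[] buffer = ArrayPool<int>.Shared.Rent(count);
        try
        {
            // Rent may return a larger array than requested,
            // so loop over count rather than buffer.Length.
            for (int i = 0; i < count; i++)
            {
                buffer[i] = i;
            }

            long sum = 0;
            for (int i = 0; i < count; i++)
            {
                sum += buffer[i];
            }
            return sum;
        }
        finally
        {
            // Always hand the array back so later callers can reuse it.
            ArrayPool<int>.Shared.Return(buffer);
        }
    }

    public static void Main()
    {
        Console.WriteLine(SumUsingPooledBuffer(10000)); // 49995000
    }
}
```

Note that a returned array is not cleared by default, so code that rents a buffer should not assume it starts out zeroed.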

Use structs instead of classes

Structs are value types, so they add no garbage collection overhead of their own when they are not part of a class. When structs are part of a class, they are stored on the heap as part of the containing object. An additional benefit is that structs need less memory than classes because they have no object header or method table pointer. You should consider using a struct when the size of the struct will be small (say around 16 bytes), the struct will be short-lived, or the struct will be immutable.

Consider the code snippet below that illustrates two types: a class named MyClass and a struct named MyStruct.

    class MyClass
    {
        public int X { get; set; }
        public int Y { get; set; }
        public int Z { get; set; }
    }

    struct MyStruct
    {
        public int X { get; set; }
        public int Y { get; set; }
        public int Z { get; set; }
    }

The following code snippet shows how you can benchmark two scenarios, using objects of the MyClass class in one case and objects of the MyStruct struct in the other.

    const int NumberOfItems = 100000;

    // One array allocation plus one heap allocation per element.
    [Benchmark]
    public void UsingClass()
    {
        MyClass[] myClasses = new MyClass[NumberOfItems];
        for (int i = 0; i < NumberOfItems; i++)
        {
            myClasses[i] = new MyClass();
            myClasses[i].X = 1;
            myClasses[i].Y = 2;
            myClasses[i].Z = 3;
        }
    }

    // A single array allocation; the structs are stored inline in the array.
    [Benchmark]
    public void UsingStruct()
    {
        MyStruct[] myStructs = new MyStruct[NumberOfItems];
        for (int i = 0; i < NumberOfItems; i++)
        {
            myStructs[i] = new MyStruct();
            myStructs[i].X = 1;
            myStructs[i].Y = 2;
            myStructs[i].Z = 3;
        }
    }

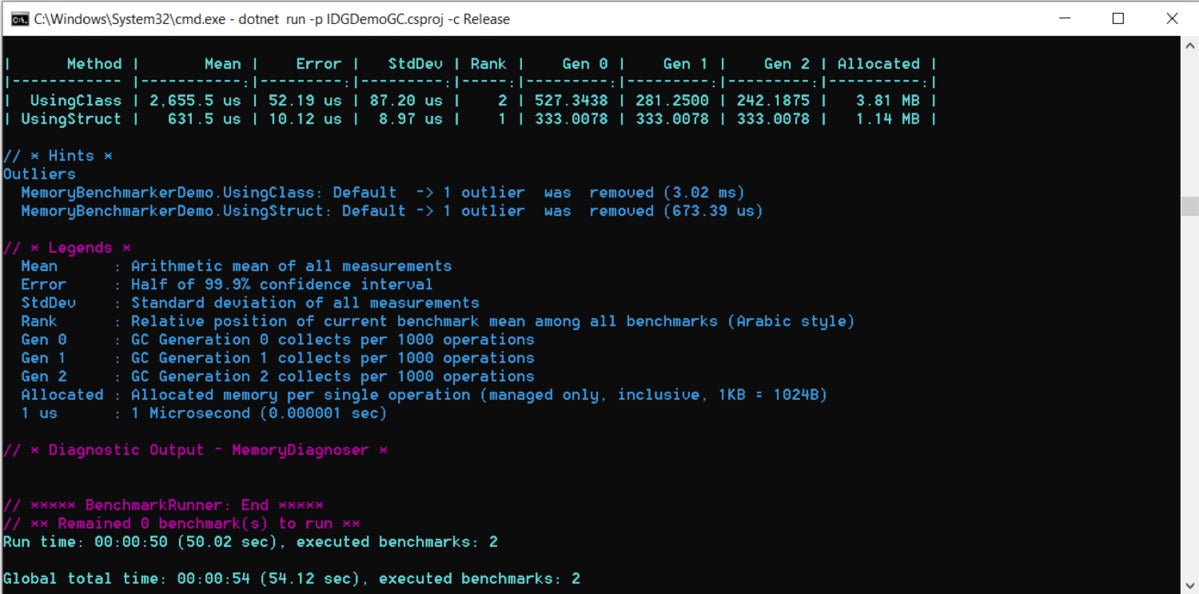

Figure 3 shows the performance benchmarks of these two methods.

Figure 3. (Image: IDG)

As you can see, allocation of structs is much faster compared to classes.
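A rough way to see where the difference comes from is to compare the number of bytes each approach allocates. The sketch below does this with GC.GetAllocatedBytesForCurrentThread(); the PointClass and PointStruct types and the AllocationDemo helper are hypothetical stand-ins, and the exact byte counts will vary by runtime and platform.

```csharp
using System;

// Hypothetical stand-ins for a small class and an equivalent struct.
public class PointClass
{
    public int X { get; set; }
    public int Y { get; set; }
    public int Z { get; set; }
}

public struct PointStruct
{
    public int X { get; set; }
    public int Y { get; set; }
    public int Z { get; set; }
}

public static class AllocationDemo
{
    // Bytes allocated for an array of references plus one object per element.
    public static long BytesAllocatedForClasses(int count)
    {
        long before = GC.GetAllocatedBytesForCurrentThread();
        var items = new PointClass[count];
        for (int i = 0; i < count; i++)
        {
            items[i] = new PointClass { X = 1, Y = 2, Z = 3 };
        }
        long after = GC.GetAllocatedBytesForCurrentThread();
        GC.KeepAlive(items);
        return after - before;
    }

    // Bytes allocated for a single array that stores the structs inline.
    public static long BytesAllocatedForStructs(int count)
    {
        long before = GC.GetAllocatedBytesForCurrentThread();
        var items = new PointStruct[count];
        for (int i = 0; i < count; i++)
        {
            items[i] = new PointStruct { X = 1, Y = 2, Z = 3 };
        }
        long after = GC.GetAllocatedBytesForCurrentThread();
        GC.KeepAlive(items);
        return after - before;
    }

    public static void Main()
    {
        Console.WriteLine(BytesAllocatedForClasses(100000));
        Console.WriteLine(BytesAllocatedForStructs(100000));
    }
}
```

The class version pays for a per-object header on every element in addition to the reference stored in the array, while the struct version is one contiguous block that the GC tracks as a single object.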

Avoid using finalizers

Whenever you have a destructor in your class, the runtime treats it as a Finalize() method. As finalization is costly, you should avoid using destructors and hence finalizers in your classes.

When you have a finalizer in your class, the runtime registers each instance of that class in the finalization queue. When the GC finds that such an object is no longer reachable, it cannot reclaim the memory right away; instead it moves the object to the “Freachable” queue so that the finalizer can run. Only in a later collection does the GC reclaim the memory occupied by the object. What’s more, an instance of a class that contains a finalizer is automatically promoted to a higher generation since it cannot be collected in generation 0.

Consider the two classes given below.

    class WithFinalizer
    {
        public int X { get; set; }
        public int Y { get; set; }
        public int Z { get; set; }

        // Even an empty finalizer makes every instance finalizable.
        ~WithFinalizer()
        {
        }
    }

    class WithoutFinalizer
    {
        public int X { get; set; }
        public int Y { get; set; }
        public int Z { get; set; }
    }

The following code snippet benchmarks the performance of two methods, one that uses instances of a class with a finalizer and one that uses instances of a class without a finalizer.

    [Benchmark]
    public void AllocateMemoryForClassesWithFinalizer()
    {
        for (int i = 0; i < NumberOfItems; i++)
        {
            WithFinalizer obj = new WithFinalizer();
            obj.X = 1;
            obj.Y = 2;
            obj.Z = 3;
        }
    }

    [Benchmark]
    public void AllocateMemoryForClassesWithoutFinalizer()
    {
        for (int i = 0; i < NumberOfItems; i++)
        {
            WithoutFinalizer obj = new WithoutFinalizer();
            obj.X = 1;
            obj.Y = 2;
            obj.Z = 3;
        }
    }

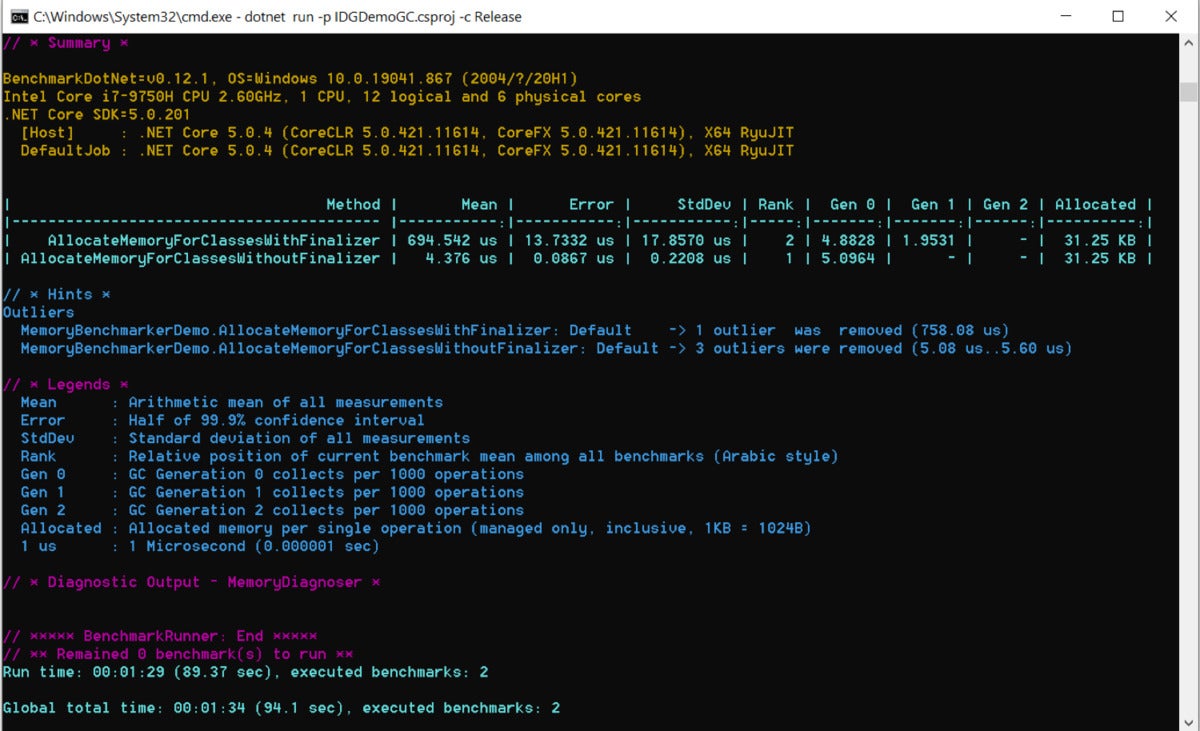

Figure 4 shows the output of the benchmarks when the value of NumberOfItems equals 1000. Note that the AllocateMemoryForClassesWithoutFinalizer method completes the task in a fraction of the time the AllocateMemoryForClassesWithFinalizer method takes to complete it.

Figure 4. (Image: IDG)
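Where a finalizer cannot be avoided, for instance as a safety net around a resource that must be released, the usual way to limit its cost is to implement IDisposable and call GC.SuppressFinalize in Dispose. The following is a minimal sketch of that pattern; ResourceHolder is a hypothetical type and the actual cleanup is left out.

```csharp
using System;

// Hypothetical resource wrapper, used only for illustration.
public class ResourceHolder : IDisposable
{
    public bool IsDisposed { get; private set; }

    public void Dispose()
    {
        Dispose(true);
        // Cleanup has already run, so tell the GC that this instance
        // no longer needs to be finalized.
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (IsDisposed)
        {
            return;
        }
        // Release resources here (omitted in this sketch).
        IsDisposed = true;
    }

    // Safety net for callers that forget to call Dispose.
    ~ResourceHolder()
    {
        Dispose(false);
    }
}

public static class DisposeDemo
{
    public static void Main()
    {
        var holder = new ResourceHolder();
        using (holder)
        {
            // Work with the resource here.
        }
        Console.WriteLine(holder.IsDisposed); // True
    }
}
```

Instances that are disposed properly skip finalization, so only the objects that were never disposed pay the finalization cost described above.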

Use StringBuilder to reduce allocations

Strings are immutable. So whenever you add two string objects, a new string object is created that holds the content of both strings. You can avoid the allocation of memory for this new string object by taking advantage of StringBuilder.

StringBuilder will improve performance in cases where you make repeated modifications to a string or concatenate many strings together. However, you should keep in mind that regular concatenations are faster than StringBuilder for a small number of concatenations.

When using StringBuilder, note that you can improve performance by reusing a StringBuilder instance. Another good practice to improve StringBuilder performance is to set the initial capacity of the StringBuilder instance when creating the instance.

Consider the following two methods used for benchmarking the performance of string concatenation.

    [Benchmark]
    public void ConcatStringsUsingStringBuilder()
    {
        string str = "Hello World!";
        var sb = new StringBuilder();
        for (int i = 0; i < NumberOfItems; i++)
        {
            sb.Append(str);
        }
    }

    [Benchmark]
    public void ConcatStringsUsingStringConcat()
    {
        string str = "Hello World!";
        string result = null;
        for (int i = 0; i < NumberOfItems; i++)
        {
            // Each += creates a new string and discards the previous one.
            result += str;
        }
    }

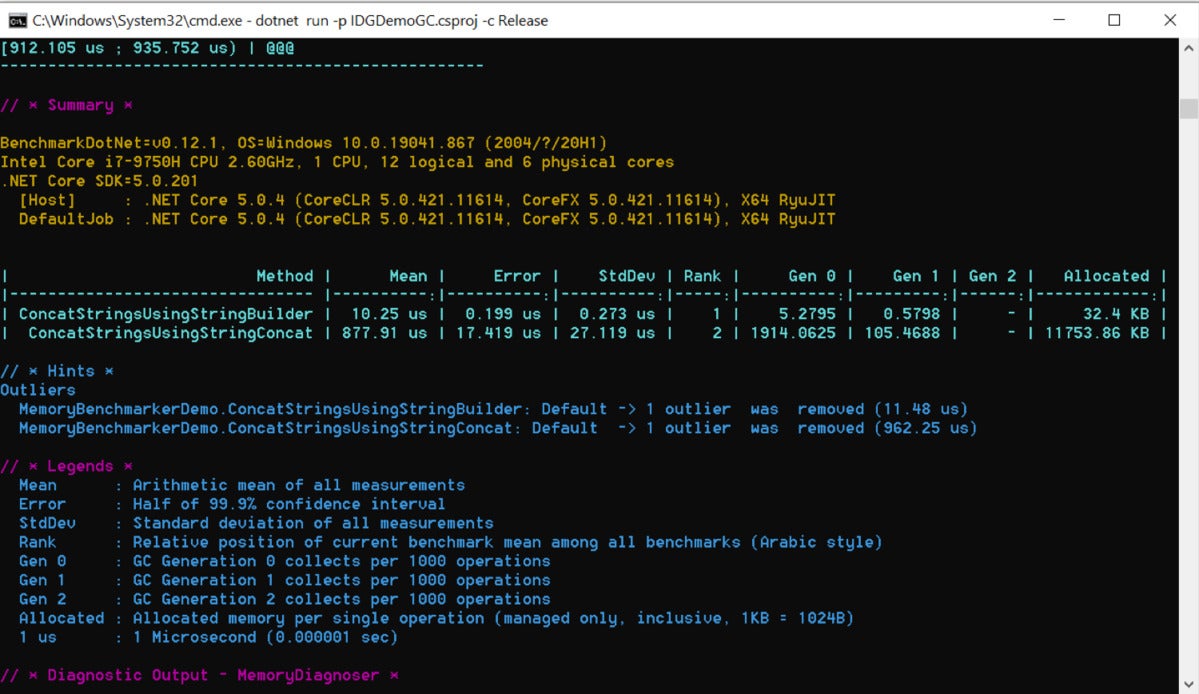

Figure 5 shows the benchmarking report for 1000 concatenations. As you can see, the benchmarks show that the ConcatStringsUsingStringBuilder method is much faster than the ConcatStringsUsingStringConcat method.

Figure 5. (Image: IDG)
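The two StringBuilder tips mentioned earlier, reusing an instance and setting an initial capacity, can be combined as in the sketch below. The BuilderDemo class, its Repeat method, and the capacity of 256 are assumptions chosen for illustration, and the shared instance makes this version suitable for single-threaded use only.

```csharp
using System;
using System.Text;

public static class BuilderDemo
{
    // One builder, created with an initial capacity and reused across calls.
    // Not thread-safe: a sketch for single-threaded use only.
    private static readonly StringBuilder Shared = new StringBuilder(256);

    // Returns value repeated count times.
    public static string Repeat(string value, int count)
    {
        Shared.Clear();                               // reset the length so the instance can be reused
        Shared.EnsureCapacity(value.Length * count);  // grow once up front instead of while appending
        for (int i = 0; i < count; i++)
        {
            Shared.Append(value);
        }
        return Shared.ToString();
    }

    public static void Main()
    {
        Console.WriteLine(Repeat("ab", 3)); // ababab
        Console.WriteLine(Repeat("x", 2));  // xx
    }
}
```

The only string allocated per call is the final result from ToString(), rather than one intermediate string per concatenation.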

General rules

There are several ways to avoid GC pressure in your .NET and .NET Core applications. You should release object references when they are no longer needed. You should avoid using objects that have multiple references. And you should reduce Generation 2 garbage collections by avoiding the use of large objects (85,000 bytes or more in size).

You can reduce the frequency and duration of garbage collections by adjusting the heap sizes and by reducing the rate of object allocations and promotions to higher generations. Note there is a trade-off between heap size and GC frequency and duration.

An increase in the heap size will reduce GC frequency and increase GC duration, while a decrease in the heap size will increase GC frequency and decrease GC duration. To minimize both GC duration and frequency, it is recommended that you keep objects short-lived as much as possible in your application, so that they are reclaimed in generation 0 rather than promoted.

Copyright © 2021 IDG Communications, Inc.