Driverless Cars Still Have Blind Spots. How Can Experts Fix Them?

In 2004, the U.S. Department of Defense issued a challenge: $1 million to the first team of engineers to develop an autonomous vehicle to race across the Mojave Desert.

Although the prize went unclaimed, the challenge publicized an idea that once belonged to science fiction: the driverless car. It caught the attention of Google co-founders Sergey Brin and Larry Page, who convened a team of engineers to buy cars from dealership lots and retrofit them with off-the-shelf sensors.

But making the cars drive on their own wasn’t a simple task. At the time, the technology was new, leaving designers for Google’s Self-Driving Car Project without much direction. YooJung Ahn, who joined the project in 2012, says it was a challenge to know where to start.

“We didn’t know what to do,” says Ahn, now the head of design for Waymo, the autonomous car company that grew out of Google’s original project. “We were trying to figure it out, cutting holes and adding things.”

But over the past five years, autonomous technology has advanced in consecutive leaps. In 2015, Google completed its first driverless trip on a public road. Three years later, Waymo launched its Waymo One ride-hailing service to ferry Arizona passengers in self-driving minivans manned by humans. Last summer, the cars began picking up customers all on their own.

Eyes on the Road

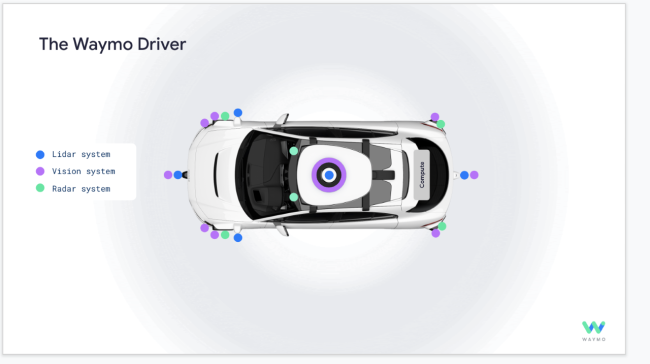

A driverless car understands its environment using three types of sensors: lidar, cameras and radar. Lidar creates a three-dimensional model of the streetscape. It helps the car judge the distance, size and direction of the objects around it by sending out pulses of light and measuring how long they take to return.
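The time-of-flight idea can be made concrete with a short sketch. The function name and the sample echo time are illustrative assumptions, not anything taken from Waymo's system; the only physics used is that a light pulse travels to the object and back, so the distance is half the round trip.

```python
# Speed of light in vacuum, in meters per second (air is only very slightly slower).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_distance_m(round_trip_s: float) -> float:
    """Distance to a reflecting object, given a pulse's round-trip time in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical echo: a pulse that returns after 667 nanoseconds.
distance = lidar_distance_m(667e-9)
print(f"{distance:.1f} m")
```

An echo that arrives after roughly two-thirds of a microsecond corresponds to an object about 100 meters away, which gives a sense of how precise the timing has to be.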

“Imagine you have a person 100 meters away and a full-size poster with a picture of a person 100 meters away,” Ahn says. “Cameras will see the same thing, but lidar can figure out whether it’s 3D or flat to determine if it’s a person or a picture.”
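One way to picture the poster-versus-person distinction is to look at how much the measured depths vary across an object. The sketch below is a toy illustration, not a description of how Waymo's perception software works: the function name, the 5-centimeter tolerance and the sample ranges are all hypothetical, and it assumes the flat surface faces the sensor head-on.

```python
def looks_flat(depths_m: list[float], tolerance_m: float = 0.05) -> bool:
    """Return True when every lidar return from an object sits at nearly the same depth."""
    if not depths_m:
        raise ValueError("need at least one depth sample")
    # A flat, head-on surface produces almost identical ranges;
    # a real body has parts that are closer and farther away.
    return max(depths_m) - min(depths_m) <= tolerance_m


# Made-up range samples, in meters, for the two cases in Ahn's example.
poster = [100.00, 100.01, 100.00, 99.99]
person = [100.00, 99.85, 100.20, 99.90]

print(looks_flat(poster), looks_flat(person))
```

A camera image carries no such per-point range, which is why the two cases look identical to it.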

Cameras, meanwhile, provide the contrast and detail a car needs to read street signs and traffic lights. Radar sees through dust, rain, fog and snow to classify objects based on their velocity, distance and angle.

The three types of sensors are compressed into a domelike structure atop Waymo’s latest cars and placed around the body of the vehicle to capture a full picture in real time, Ahn says. The sensors can detect an open car door a block away or gauge the direction a pedestrian is facing. These small but important cues allow the car to respond to sudden changes in its path.

(Credit: Waymo)

The cars are also designed to see around other vehicles while on the road, using a 360-degree, bird’s-eye-view camera that can see up to 300 meters away. Think of a U-Haul blocking traffic, for example. A self-driving car that can’t see past it might wait patiently for it to move, causing a jam. But Waymo’s latest sensors can detect vehicles coming in the opposite lane and decide whether it’s safe to go around the parked truck, Ahn says.

And new high-resolution radar is designed to spot a motorcyclist from several soccer fields away. Even in poor visibility conditions, the radar can see both static and moving objects, Ahn says. The ability to measure the speed of an approaching vehicle is helpful during maneuvers such as changing lanes.

Driverless Race

Waymo isn’t the only one in the race to develop reliable driverless cars fit for public roads. Uber, Aurora, Argo AI, and General Motors’ Cruise subsidiary have their own projects to bring self-driving cars to the road in significant numbers. Waymo’s new system cuts the cost of its sensors in half, which the company says will speed up development and help it collaborate with more car manufacturers to put more test cars on the road.

However, challenges remain, as refining the software for fully autonomous vehicles is much more complex than building the cars themselves, says Marco Pavone, director of the Autonomous Systems Laboratory at Stanford University. Teaching a car to use humanlike discretion, such as judging when it’s safe to make a left-hand turn amid oncoming traffic, is more difficult than building the physical sensors it uses to see.

In addition, he says long-range vision may be important when traveling in rural areas, but it is not especially useful in cities, where driverless cars are expected to be in highest demand.

“If the Earth were flat with no obstructions, that would probably be helpful,” Pavone says. “But it’s not as helpful in cities, where you are always bound to see just a few meters in front of you. It would be like having the eyes of an eagle but the brain of an insect.”

Editor’s note: This story has been updated to reflect the current capabilities of Waymo’s I-Pace system.