
Fortunately for such artificial neural networks (later rechristened “deep learning” when they included extra layers of neurons), decades of Moore’s Law and other improvements in computer hardware yielded a roughly 10-million-fold increase in the number of computations that a computer could do in a second. So when researchers returned to deep learning in the late 2000s, they wielded tools equal to the challenge.

These more-powerful computers made it possible to construct networks with vastly more connections and neurons and hence greater ability to model complex phenomena. Researchers used that ability to break record after record as they applied deep learning to new tasks.

While deep learning’s rise may have been meteoric, its future may be bumpy. Like Rosenblatt before them, today’s deep-learning researchers are nearing the frontier of what their tools can achieve. To understand why this will reshape machine learning, you must first understand why deep learning has been so successful and what it costs to keep it that way.

Deep learning is a modern incarnation of the long-running trend in artificial intelligence that has been moving from streamlined systems based on expert knowledge toward flexible statistical models. Early AI systems were rule based, applying logic and expert knowledge to derive results. Later systems incorporated learning to set their adjustable parameters, but these were usually few in number.

Today’s neural networks also learn parameter values, but those parameters are part of such flexible computer models that, if they are big enough, they become universal function approximators, meaning they can fit any type of data. This unlimited flexibility is the reason deep learning can be applied to so many different domains.

The flexibility of neural networks comes from taking the many inputs to the model and having the network combine them in myriad ways. This means the outputs won’t be the result of applying simple formulas but instead immensely complicated ones.

For example, when the cutting-edge image-recognition system Noisy Student converts the pixel values of an image into probabilities for what the object in that image is, it does so using a network with 480 million parameters. The training to ascertain the values of such a large number of parameters is even more remarkable because it was done with only 1.2 million labeled images, which may understandably confuse those of us who remember from high school algebra that we are supposed to have more equations than unknowns. Breaking that rule turns out to be the key.

Deep-learning models are overparameterized, which is to say they have more parameters than there are data points available for training. Classically, this would lead to overfitting, where the model learns not only general trends but also the random vagaries of the data it was trained on. Deep learning avoids this trap by initializing the parameters randomly and then iteratively adjusting sets of them to better fit the data using a method called stochastic gradient descent. Surprisingly, this procedure has been proven to ensure that the learned model generalizes well.
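To make “more unknowns than equations” concrete, here is a minimal NumPy sketch under stated assumptions: a toy stand-in for a deep network, namely a linear model over 100 fixed random cosine features, fitted to only 10 noisy points with plain stochastic gradient descent from a random initialization. The function name `train_overparameterized`, the feature map, the learning rate, and the data are all hypothetical choices, not the setup of Noisy Student or any other system named here. It shows only that SGD drives the training error to nearly zero despite having ten times as many parameters as data points; it does not demonstrate the generalization result mentioned above.

```python
import numpy as np

def train_overparameterized(n_points=10, n_params=100, lr=0.01, epochs=2000, seed=0):
    """Fit a toy random-feature model that has far more parameters than data points.

    Hypothetical setup: n_params fixed random cosine features of a 1-D input,
    fitted by plain SGD to n_points noisy samples of sin(x).
    """
    rng = np.random.default_rng(seed)
    x = np.linspace(-np.pi, np.pi, n_points)
    y = np.sin(x) + 0.05 * rng.normal(size=n_points)

    # Fixed random features phi_j(x) = cos(w_j * x + b_j); only theta is trained.
    w = 2.0 * rng.normal(size=n_params)
    b = rng.uniform(0.0, 2.0 * np.pi, size=n_params)
    X = np.cos(np.outer(x, w) + b)           # shape (n_points, n_params)

    # Random initialization of the trainable parameters, as the text describes.
    theta = 0.01 * rng.normal(size=n_params)

    for _ in range(epochs):
        for i in rng.permutation(n_points):  # visit data points in random order
            residual = X[i] @ theta - y[i]
            theta -= lr * residual * X[i]    # SGD step on 0.5 * residual**2

    train_mse = float(np.mean((X @ theta - y) ** 2))
    return theta, train_mse

theta, mse = train_overparameterized()
print(f"{theta.size} parameters, 10 data points, training MSE = {mse:.2e}")
```

Only the final linear layer is trained here, which makes the toy far simpler than a real deep network, but the underdetermined system (100 unknowns, 10 equations) is the same situation the paragraph describes.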

The success of flexible deep-learning models can be seen in machine translation. For decades, software has been used to translate text from one language to another. Early approaches to this problem used rules designed by grammar experts. But as more textual data became available in specific languages, statistical approaches (ones that go by such esoteric names as maximum entropy, hidden Markov models, and conditional random fields) could be applied.

Initially, the approaches that worked best for each language differed based on data availability and grammatical properties. For example, rule-based approaches to translating languages such as Urdu, Arabic, and Malay outperformed statistical ones, at first. Today, all these approaches have been outpaced by deep learning, which has proven itself superior almost everywhere it’s applied.

So the good news is that deep learning provides enormous flexibility. The bad news is that this flexibility comes at an enormous computational cost. This unfortunate reality has two parts.

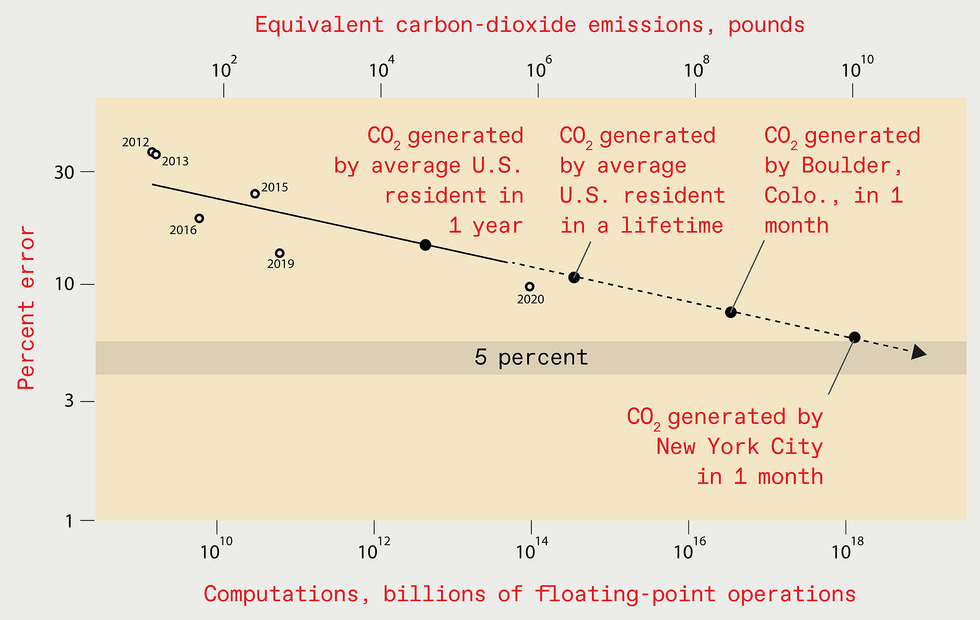

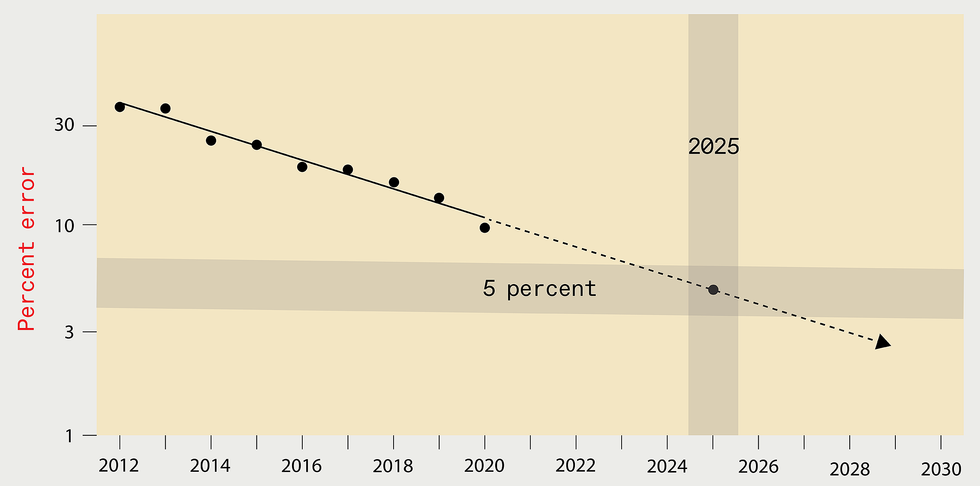

Extrapolating the gains of recent years might suggest that by 2025 the error level in the best deep-learning systems designed for recognizing objects in the ImageNet data set should be reduced to just 5 percent [top]. But the computing resources and energy required to train such a future system would be enormous, leading to the emission of as much carbon dioxide as New York City generates in one month [bottom].

Source: N.C. THOMPSON, K. GREENEWALD, K. LEE, G.F. MANSO

The first part is true of all statistical models: To improve performance by a factor of k, at least k² more data points must be used to train the model, because statistical error typically shrinks only with the square root of the number of data points. The second part of the computational cost comes explicitly from overparameterization: the number of parameters grows along with the data, and every parameter must be processed for every data point. Once accounted for, this yields a total computational cost for improvement of at least k⁴. That little 4 in the exponent is very expensive: A 10-fold improvement, for example, would require at least a 10,000-fold increase in computation.
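The arithmetic in this paragraph can be checked with a few lines of Python. The sketch simply takes the exponents the text gives (k² more data, and k⁴ more computation once overparameterization is accounted for); the function names `data_needed` and `compute_needed` are illustrative, not from any library.

```python
def data_needed(k: float) -> float:
    """Data multiplier for a k-fold improvement: error ~ 1/sqrt(n), so n must grow by k**2."""
    return k ** 2

def compute_needed(k: float, exponent: float = 4.0) -> float:
    """Compute multiplier for a k-fold improvement: k**2 more data times k**2 more parameters."""
    return k ** exponent

for k in (2, 10):
    print(f"{k}x better: {data_needed(k):,.0f}x data, {compute_needed(k):,.0f}x compute")
```

For k = 10 this prints 100x data and 10,000x compute, matching the figure in the text.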

To make the flexibility-computation trade-off more vivid, consider a scenario where you are trying to predict whether a patient’s X-ray reveals cancer. Suppose further that the true answer can be found if you measure 100 details in the X-ray (often called variables or features). The challenge is that we don’t know ahead of time which variables are important, and there could be a very large pool of candidate variables to consider.

The expert-system approach to this problem would be to have people who are knowledgeable in radiology and oncology specify the variables they think are important, allowing the system to examine only those. The flexible-system approach is to test as many of the variables as possible and let the system figure out on its own which are important, requiring more data and incurring much higher computational costs in the process.

Models for which experts have established the relevant variables are able to learn quickly what values work best for those variables, doing so with limited amounts of computation, which is why they were so popular early on. But their ability to learn stalls if an expert hasn’t correctly specified all the variables that should be included in the model. In contrast, flexible models like deep learning are less efficient, taking vastly more computation to match the performance of expert models. But, with enough computation (and data), flexible models can outperform ones for which experts have attempted to specify the relevant variables.
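The expert-versus-flexible trade-off can be sketched with a toy linear stand-in for the X-ray scenario. Everything here is hypothetical: 50 candidate variables of which only 5 matter, an “expert” who specifies 4 of those 5 and misses one, and ordinary least squares standing in for both model types (the flexible model here is not a deep network; it just uses every candidate variable). With little data the small expert model wins; with plenty of data the flexible model overtakes it, while the expert model stalls at the error caused by the missing variable.

```python
import numpy as np

def compare_models(n_train, n_candidates=50, n_test=2000, seed=0):
    """Return (expert_test_mse, flexible_test_mse) for a toy linear problem."""
    rng = np.random.default_rng(seed)
    true_coef = np.zeros(n_candidates)
    true_coef[:5] = [2.0, -1.5, 1.0, 0.5, -1.0]  # only 5 variables actually matter
    expert_vars = [0, 1, 2, 3]                    # hypothetical expert misses variable 4

    def sample(n):
        X = rng.normal(size=(n, n_candidates))
        y = X @ true_coef + 0.1 * rng.normal(size=n)
        return X, y

    X_tr, y_tr = sample(n_train)
    X_te, y_te = sample(n_test)

    # Expert model: least squares on the expert's chosen variables only (cheap to fit).
    coef_e, *_ = np.linalg.lstsq(X_tr[:, expert_vars], y_tr, rcond=None)
    mse_e = np.mean((X_te[:, expert_vars] @ coef_e - y_te) ** 2)

    # Flexible model: least squares on every candidate variable (needs more data).
    coef_f, *_ = np.linalg.lstsq(X_tr, y_tr, rcond=None)
    mse_f = np.mean((X_te @ coef_f - y_te) ** 2)
    return float(mse_e), float(mse_f)

for n in (20, 2000):
    expert, flexible = compare_models(n)
    print(f"n={n}: expert test MSE {expert:.3f}, flexible test MSE {flexible:.3f}")
```

The cost side shows up too: fitting least squares on all p candidate variables scales roughly with p², so the flexible model pays for its generality in computation as well as data.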

Clearly, you can get improved performance from deep learning if you use more computing power to build bigger models and train them with more data. But how expensive will this computational burden become? Will costs become sufficiently high that they hinder progress?

To answer these questions in a concrete way, we recently gathered data from more than 1,000 research papers on deep learning, spanning the areas of image classification, object detection, question answering, named-entity recognition, and machine translation. Here, we will only discuss image classification in detail, but the lessons apply broadly.

Over the years, reducing image-classification errors has come with an enormous expansion in computational burden. For example, in 2012 AlexNet, the model that first showed the power of training deep-learning systems on graphics processing units (GPUs), was trained for five to six days using two GPUs. By 2018, another model, NASNet-A, had cut the error rate of AlexNet in half, but it used more than 1,000 times as much computing to achieve this.

Our analysis of this phenomenon also allowed us to compare what’s actually happened with theoretical expectations. Theory tells us that computing needs to scale with at least the fourth power of the improvement in performance. In practice, the actual requirements have scaled with at least the ninth power.

This ninth power means that to halve the error rate, you can expect to need more than 500 times the computational resources. That’s a devastatingly high price. There may be a silver lining here, however. The gap between what’s happened in practice and what theory predicts might mean that there are still undiscovered algorithmic improvements that could greatly improve the efficiency of deep learning.
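The gap between theory and practice is easy to quantify, assuming compute grows as (error reduction)^b. Halving the error costs 2⁴ = 16 times the compute if b = 4, but 2⁹ = 512 times if b = 9, which is where “more than 500 times” comes from. Running the same formula backward on the AlexNet-to-NASNet-A figures (half the error for roughly 1,000 times the compute) gives an implied exponent near 10. This is a back-of-the-envelope check on one pair of models, not the authors’ fitting method across their 1,000-plus papers; the function names are illustrative.

```python
import math

def compute_multiplier(error_reduction: float, exponent: float) -> float:
    """Compute needed when error falls `error_reduction`-fold, if compute ~ improvement**exponent."""
    return error_reduction ** exponent

def implied_exponent(error_reduction: float, compute_ratio: float) -> float:
    """Solve compute_ratio = error_reduction**b for b."""
    return math.log(compute_ratio) / math.log(error_reduction)

print(compute_multiplier(2, 4))               # theory (fourth power): 16
print(compute_multiplier(2, 9))               # practice (ninth power): 512
print(round(implied_exponent(2, 1000), 2))    # AlexNet -> NASNet-A: 9.97
```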


As we noted, Moore’s Law and other hardware advances have provided massive increases in chip performance. Does this mean that the escalation in computing requirements doesn’t matter? Unfortunately, no. Of the 1,000-fold difference in the computing used by AlexNet and NASNet-A, only a six-fold improvement came from better hardware; the rest came from using more processors or running them longer, incurring higher costs.
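The split between faster hardware and more hardware is a single division. The sketch below treats “more than 1,000” as exactly 1,000, so the result is a rough lower bound on the share that had to be bought with more processors or longer runs.

```python
total_increase = 1_000   # AlexNet -> NASNet-A compute ratio (text: "more than 1,000")
hardware_gain = 6        # portion attributed to better hardware (text: six-fold)

# Whatever better chips did not supply had to come from more processors and/or more time.
more_hardware_or_time = total_increase / hardware_gain
print(f"~{more_hardware_or_time:.0f}x from more processors or longer training")
```

That is roughly a 167-fold increase in processor count multiplied by running time, which is paid for directly in money and energy.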

Having estimated the computational cost-performance curve for image recognition, we can use it to estimate how much computation would be needed to reach even more impressive performance benchmarks in the future. For example, achieving a 5 percent error rate would require 10¹⁹ billion (that is, 10²⁸) floating-point operations.
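The estimate-then-extrapolate step can be sketched as a straight-line fit in log-log space. This is a minimal illustration under loud assumptions: the data points, the starting compute of 1e10 operations, and the exact ninth-power law that generates them are invented, not the authors’ data, and `fit_power_law` and `extrapolate` are hypothetical helpers. The point is the method: fit log(compute) against log(1/error), then read off the compute for a target error.

```python
import numpy as np

def fit_power_law(errors, computes):
    """Fit log10(compute) = a + b * log10(1/error) by least squares; return (a, b)."""
    x = np.log10(1.0 / np.asarray(errors))
    y = np.log10(np.asarray(computes))
    b, a = np.polyfit(x, y, 1)  # polyfit returns [slope, intercept]
    return a, b

def extrapolate(a, b, target_error):
    """Predicted compute (in the fit's units) needed to reach target_error."""
    return 10 ** (a + b * np.log10(1.0 / target_error))

# Hypothetical points generated from an exact ninth-power law (NOT real measurements).
errors = np.array([0.40, 0.30, 0.20, 0.15])
computes = 1e10 * (0.40 / errors) ** 9
a, b = fit_power_law(errors, computes)
print(round(b, 3))                          # recovers the exponent: 9.0
print(f"{extrapolate(a, b, 0.05):.2e}")     # ≈ 1.34e+18, i.e. 8**9 times the compute at 40% error
```

Because every point on the fitted line is multiplied by the same power, small errors in the fitted exponent become large errors after extrapolating many orders of magnitude, which is why such predictions are best read as indicating scale rather than precise values.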

Important work by scholars at the University of Massachusetts Amherst allows us to understand the economic cost and carbon emissions implied by this computational burden. The answers are grim: Training such a model would cost US $100 billion and would produce as much carbon emissions as New York City does in a month. And if we estimate the computational burden of a 1 percent error rate, the results are considerably worse.

Is extrapolating out so many orders of magnitude a reasonable thing to do? Yes and no. Certainly, it is important to understand that the predictions aren’t precise, although with such eye-watering results, they don’t need to be to convey the overall message of unsustainability. Extrapolating this way would be unreasonable if we assumed that researchers would follow this trajectory all the way to such an extreme outcome. We don’t. Faced with skyrocketing costs, researchers will either have to come up with more efficient ways to solve these problems, or they will abandon working on these problems and progress will languish.

On the other hand, extrapolating our results is not only reasonable but also important, because it conveys the magnitude of the challenge ahead. The leading edge of this problem is already becoming apparent. When Google subsidiary DeepMind trained its system to play Go, it was estimated to have cost $35 million. When DeepMind’s researchers designed a system to play the StarCraft II video game, they purposefully didn’t try multiple ways of architecting an important component, because the training cost would have been too high.

At OpenAI, an important machine-learning think tank, researchers recently designed and trained a much-lauded deep-learning language system called GPT-3 at the cost of more than $4 million. Even though they made a mistake when they implemented the system, they didn’t fix it, explaining simply in a supplement to their scholarly publication that “due to the cost of training, it wasn’t feasible to retrain the model.”

Even businesses outside the tech industry are now starting to shy away from the computational expense of deep learning. A large European supermarket chain recently abandoned a deep-learning-based system that markedly improved its ability to predict which products would be purchased. The company’s executives dropped that attempt because they judged that the cost of training and running the system would be too high.

Faced with rising economic and environmental costs, the deep-learning community will need to find ways to increase performance without causing computing demands to go through the roof. If it doesn’t, progress will stagnate. But don’t despair yet: Plenty is being done to address this challenge.

One strategy is to use processors designed specifically to be efficient for deep-learning calculations. This approach was widely used over the last decade, as CPUs gave way to GPUs and, in some cases, field-programmable gate arrays and application-specific ICs (including Google’s Tensor Processing Unit). Fundamentally, all of these approaches sacrifice the generality of the computing platform for the efficiency of increased specialization. But such specialization faces diminishing returns. So longer-term gains will require adopting wholly different hardware frameworks, perhaps hardware that is based on analog, neuromorphic, optical, or quantum systems. Thus far, however, these wholly different hardware frameworks have yet to have much impact.


Another approach to reducing the computational burden focuses on generating neural networks that, when implemented, are smaller. This tactic lowers the cost each time you use them, but it often increases the training cost (what we’ve described so far in this article). Which of these costs matters most depends on the situation. For a widely used model, running costs are the biggest component of the total sum invested. For other models, such as those that frequently need to be retrained, training costs may dominate. In either case, the total cost must be larger than just the training on its own. So if the training costs are too high, as we’ve shown, then the total costs will be, too.
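The trade-off between training and running costs can be written as a one-line cost model: lifetime cost equals the one-off training cost plus the per-use cost times the number of uses. The numbers below are hypothetical, in arbitrary units, chosen only to show the crossover: a “small” model that costs 3x more to train (for example, because a large network is trained and then compressed) but 5x less per use.

```python
def total_cost(training_cost: float, cost_per_use: float, n_uses: int) -> float:
    """Lifetime cost: one-off training cost plus inference cost for every use."""
    return training_cost + cost_per_use * n_uses

# Hypothetical costs in arbitrary units (not measurements of any real model).
large = dict(training_cost=1.0, cost_per_use=1e-6)
small = dict(training_cost=3.0, cost_per_use=2e-7)

for n_uses in (1_000, 10**9):
    print(n_uses, total_cost(**large, n_uses=n_uses), total_cost(**small, n_uses=n_uses))
```

At a thousand uses, training dominates and the large model is cheaper overall; at a billion uses, running costs dominate and the smaller model wins. In both cases the total can never fall below the training cost, which is the article’s point.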

And that’s the challenge with the various tactics that have been used to make implementation smaller: They don’t reduce training costs enough. For example, one allows for training a large network but penalizes complexity during training. Another involves training a large network and then “pruning” away unimportant connections. Yet another finds as efficient an architecture as possible by optimizing across many models, something called neural-architecture search. While each of these techniques can offer significant benefits for implementation, the effects on training are muted, certainly not enough to address the concerns we see in our data. And in many cases they make the training costs higher.
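Of the tactics listed, pruning is the easiest to show. Below is a minimal magnitude-pruning sketch in NumPy, assuming “unimportant” means “smallest absolute weight” (one common heuristic, not the only one); `magnitude_prune` is a hypothetical helper, not a library function. Note that it operates on weights that have already been trained, which is exactly why pruning shrinks the deployed model without reducing the cost of training the large network in the first place.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, fraction: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude `fraction` of entries zeroed."""
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in [0, 1)")
    magnitudes = np.abs(weights).ravel()
    k = int(fraction * magnitudes.size)               # number of weights to remove
    if k == 0:
        return weights.copy()
    threshold = np.partition(magnitudes, k - 1)[k - 1]  # k-th smallest magnitude
    pruned = weights.copy()
    pruned[np.abs(pruned) <= threshold] = 0.0         # drop weights at or below the threshold
    return pruned

w = np.array([[0.9, -0.05, 0.3],
              [-0.01, 0.6, -0.2]])
print(magnitude_prune(w, 0.5))  # keeps 0.9, 0.3, 0.6; zeroes the three smallest
```

Weights tied with the threshold are all removed, so slightly more than the requested fraction can be pruned; practical pipelines also usually fine-tune the network after pruning, which adds further training cost.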

One up-and-coming technique that could reduce training costs goes by the name meta-learning. The idea is that the system learns on a variety of data and then can be applied in many areas. For example, rather than building separate systems to recognize dogs in images, cats in images, and cars in images, a single system could be trained on all of them and used multiple times.

Unfortunately, recent work by Andrei Barbu of MIT has revealed how hard meta-learning can be. He and his coauthors showed that even small differences between the original data and where you want to use it can severely degrade performance. They demonstrated that current image-recognition systems depend heavily on things like whether the object is photographed at a particular angle or in a particular pose. So even the simple task of recognizing the same objects in different poses causes the accuracy of the system to be nearly halved.

Benjamin Recht of the University of California, Berkeley, and others made this point even more starkly, showing that even with novel data sets purposely constructed to mimic the original training data, performance drops by more than 10 percent. If even small changes in data cause large performance drops, the data needed for a comprehensive meta-learning system might be enormous. So the great promise of meta-learning remains far from being realized.

Another possible strategy to evade the computational limits of deep learning would be to move to other, perhaps as-yet-undiscovered or underappreciated types of machine learning. As we described, machine-learning systems built around the insight of experts can be much more computationally efficient, but their performance can’t reach the same heights as deep-learning systems if those experts cannot distinguish all the contributing factors.

Neuro-symbolic methods and other techniques are being developed to combine the power of expert knowledge and reasoning with the flexibility often found in neural networks.

Like the situation that Rosenblatt faced at the dawn of neural networks, deep learning is today becoming constrained by the available computational tools. Faced with computational scaling that would be economically and environmentally ruinous, we must either adapt how we do deep learning or face a future of much slower progress. Clearly, adaptation is preferable. A clever breakthrough might find a way to make deep learning more efficient or computer hardware more powerful, which would allow us to continue to use these extraordinarily flexible models. If not, the pendulum will likely swing back toward relying more on experts to identify what needs to be learned.

